[ad_1]

Picture by Creator

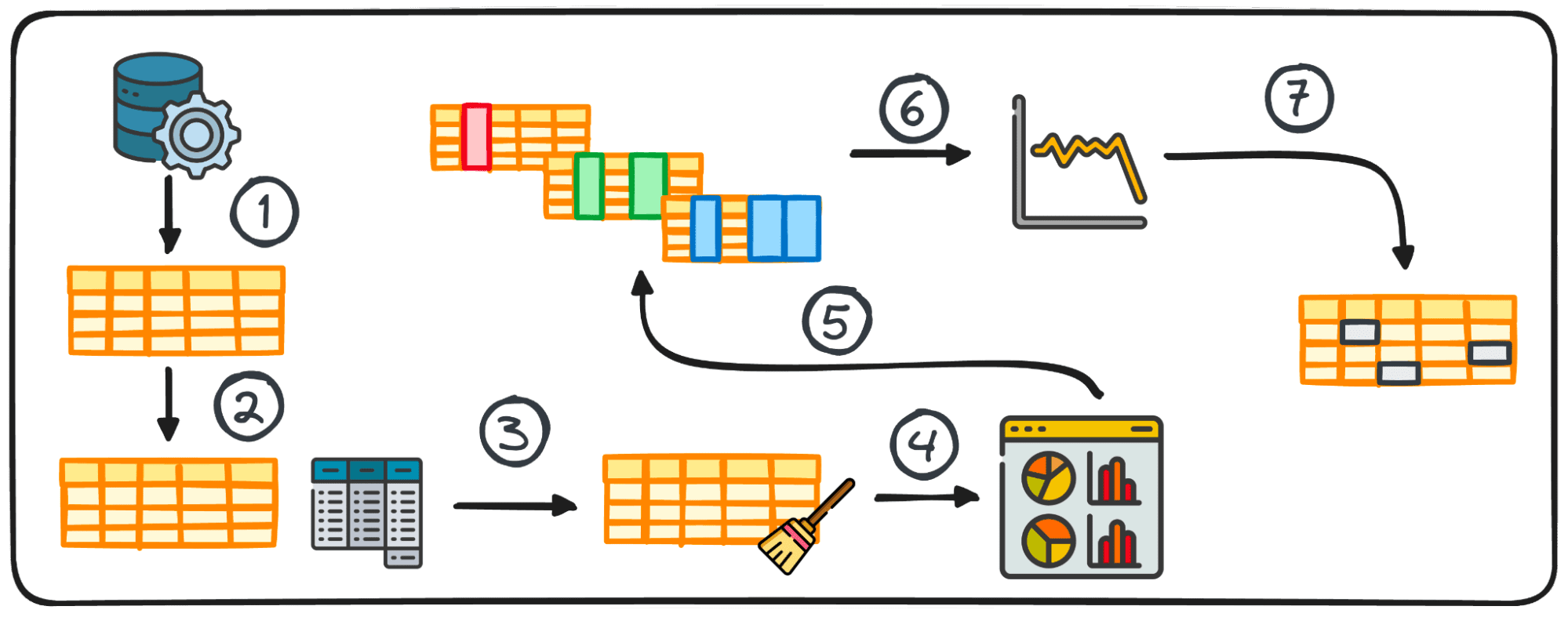

Exploratory Knowledge Evaluation (or EDA) stands as a core section inside the Knowledge Evaluation Course of, emphasizing an intensive investigation right into a dataset’s internal particulars and traits.

Its major intention is to uncover underlying patterns, grasp the dataset’s construction, and determine any potential anomalies or relationships between variables.

By performing EDA, knowledge professionals test the standard of the info. Subsequently, it ensures that additional evaluation is predicated on correct and insightful data, thereby lowering the probability of errors in subsequent levels.

So let’s attempt to perceive collectively what are the essential steps to carry out EDA for our subsequent Knowledge Science undertaking.

I’m fairly certain you will have already heard the phrase:

Rubbish in, Rubbish out

Enter knowledge high quality is at all times a very powerful issue for any profitable knowledge undertaking.

Sadly, most knowledge is grime at first. By the method of Exploratory Knowledge Evaluation, a dataset that’s practically usable will be remodeled into one that’s absolutely usable.

It is necessary to make clear that it isn’t a magic answer for purifying any dataset. Nonetheless, quite a few EDA methods are efficient at addressing a number of typical points encountered inside datasets.

So… let’s be taught essentially the most fundamental steps based on Ayodele Oluleye in his ebook Exploratory Knowledge Evaluation with Python Cookbook.

Step 1: Knowledge Assortment

The preliminary step in any knowledge undertaking is having the info itself. This primary step is the place knowledge is gathered from numerous sources for subsequent evaluation.

2. Abstract Statistics

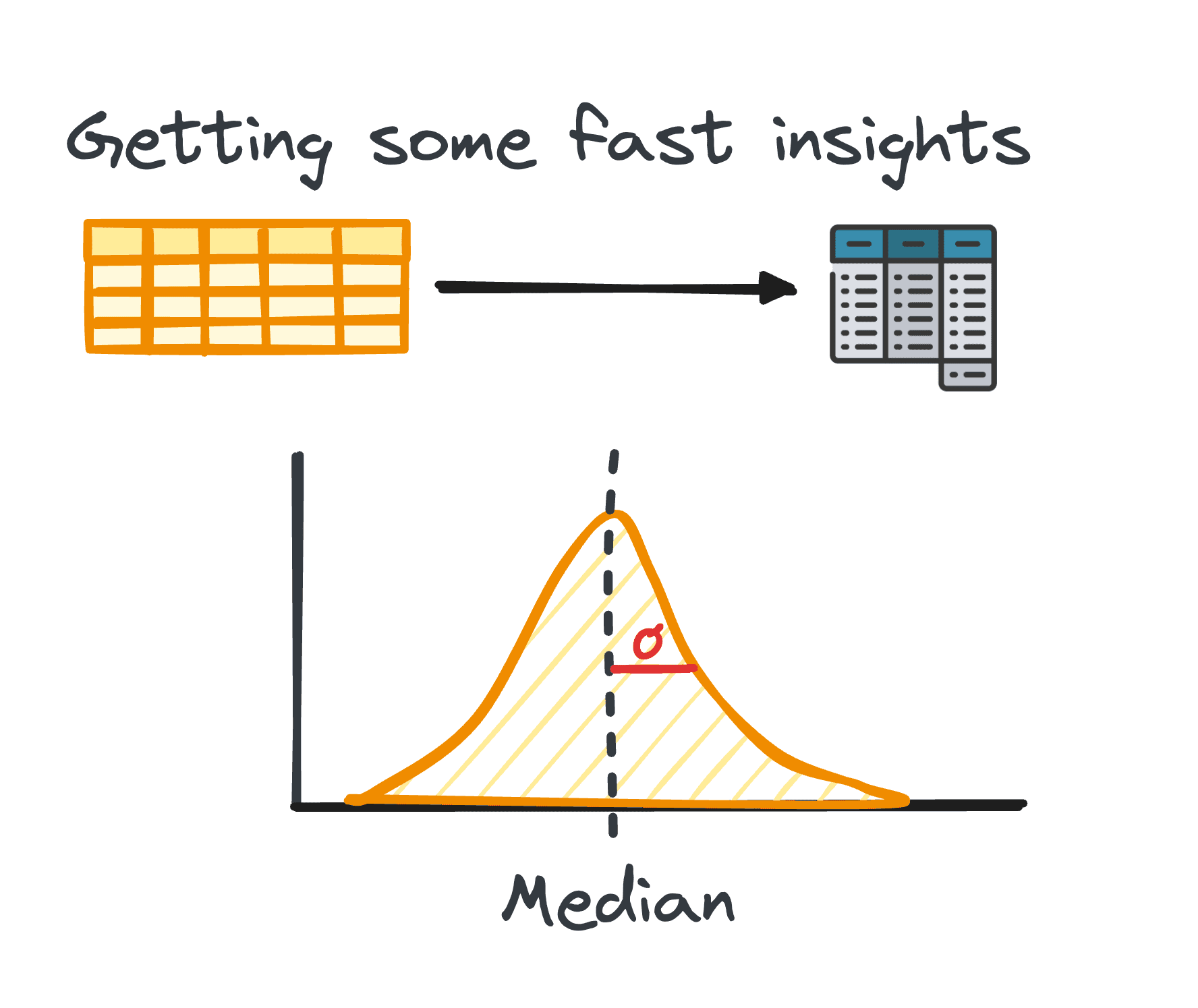

In knowledge evaluation, dealing with tabular knowledge is sort of widespread. In the course of the evaluation of such knowledge, it is usually needed to achieve speedy insights into the info’s patterns and distribution.

These preliminary insights function a base for additional exploration and in-depth evaluation and are referred to as abstract statistics.

They provide a concise overview of the dataset’s distribution and patterns, encapsulated by way of metrics similar to imply, median, mode, variance, customary deviation, vary, percentiles, and quartiles.

Picture by Creator

3. Making ready Knowledge for EDA

Earlier than beginning our exploration, knowledge often must be ready for additional evaluation. Knowledge preparation includes reworking, aggregating, or cleansing knowledge utilizing Python’s pandas library to go well with the wants of your evaluation.

This step is tailor-made to the info’s construction and might embody grouping, appending, merging, sorting, categorizing, and coping with duplicates.

In Python, engaging in this activity is facilitated by the pandas library by way of its numerous modules.

The preparation course of for tabular knowledge does not adhere to a common technique; as a substitute, it is formed by the precise traits of our knowledge, together with its rows, columns, knowledge varieties, and the values it comprises.

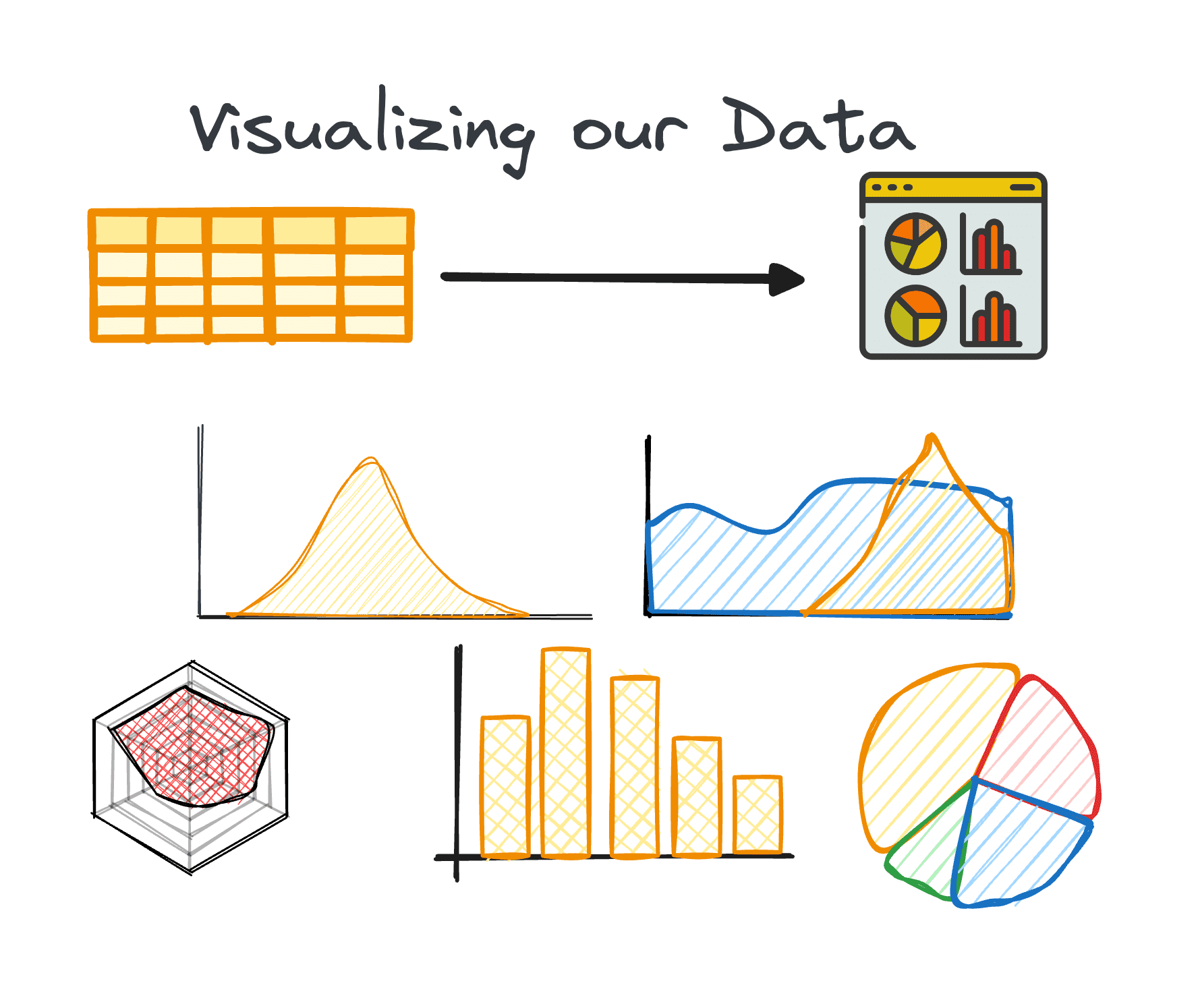

4. Visualizing Knowledge

Visualization is a core part of EDA, making complicated relationships and developments inside the dataset simply understandable.

Utilizing the fitting charts may help us determine developments inside an enormous dataset and discover hidden patterns or outliers. Python gives completely different libraries for knowledge visualization, together with Matplotlib or Seaborn amongst others.

Picture by Creator

5. Performing Variable Evaluation:

Variable evaluation will be both univariate, bivariate, or multivariate. Every of them offers insights into the distribution and correlations between the dataset’s variables. Methods differ relying on the variety of variables analyzed:

Univariate

The principle focus in univariate evaluation is on inspecting every variable inside our dataset by itself. Throughout this evaluation, we will uncover insights such because the median, mode, most, vary, and outliers.

Any such evaluation is relevant to each categorical and numerical variables.

Bivariate

Bivariate evaluation goals to disclose insights between two chosen variables and focuses on understanding the distribution and relationship between these two variables.

As we analyze two variables on the similar time, one of these evaluation will be trickier. It may possibly embody three completely different pairs of variables: numerical-numerical, numerical-categorical, and categorical-categorical.

Multivariate

A frequent problem with massive datasets is the simultaneous evaluation of a number of variables. Regardless that univariate and bivariate evaluation strategies supply worthwhile insights, that is often not sufficient for analyzing datasets containing a number of variables (often greater than 5).

This difficulty of managing high-dimensional knowledge, often known as the curse of dimensionality, is well-documented. Having a lot of variables will be advantageous because it permits the extraction of extra insights. On the similar time, this benefit will be in opposition to us because of the restricted variety of methods out there for analyzing or visualizing a number of variables concurrently.

6. Analyzing Time Sequence Knowledge

This step focuses on the examination of information factors collected over common time intervals. Time sequence knowledge applies to knowledge that change over time. This principally means our dataset consists of a bunch of information factors which might be recorded over common time intervals.

Once we analyze time sequence knowledge, we will sometimes uncover patterns or developments that repeat over time and current a temporal seasonality. Key parts of time sequence knowledge embody developments, seasonal differences, cyclical variations, and irregular variations or noise.

7. Coping with Outliers and Lacking Values

Outliers and lacking values can skew evaluation outcomes if not correctly addressed. This is the reason we should always at all times think about a single section to take care of them.

Figuring out, eradicating, or changing these knowledge factors is essential for sustaining the integrity of the dataset’s evaluation. Subsequently, it’s extremely necessary to handle them earlier than begin analyzing our knowledge.

- Outliers are knowledge factors that current a major deviation from the remaining. They often current unusually excessive or low values.

- Lacking values are the absence of information factors comparable to a particular variable or commentary.

A important preliminary step in coping with lacking values and outliers is to grasp why they’re current within the dataset. This understanding usually guides the collection of essentially the most appropriate technique for addressing them. Further elements to think about are the traits of the info and the precise evaluation that shall be performed.

EDA not solely enhances the dataset’s readability but in addition allows knowledge professionals to navigate the curse of dimensionality by offering methods for managing datasets with quite a few variables.

By these meticulous steps, EDA with Python equips analysts with the instruments essential to extract significant insights from knowledge, laying a strong basis for all subsequent knowledge evaluation endeavors.

Josep Ferrer is an analytics engineer from Barcelona. He graduated in physics engineering and is at the moment working within the Knowledge Science subject utilized to human mobility. He’s a part-time content material creator centered on knowledge science and expertise. You may contact him on LinkedIn, Twitter or Medium.

[ad_2]

Source link