President Biden met with seven artificial intelligence companies last week to seek voluntary safeguards for AI products and discuss future regulatory prospects.

Now, four of those companies have formed a new coalition aimed at promoting responsible AI development and establishing industry standards in the face of increasing government and societal scrutiny.

“Today, Anthropic, Google, Microsoft, and OpenAI are announcing the formation of the Frontier Model Forum, a new industry body focused on ensuring safe and responsible development of frontier AI models,” a statement on OpenAI’s website read.

In what it says is for the benefit of the “entire AI ecosystem,” the Frontier Model Forum will leverage the technical and operational expertise of its member companies, the statement said. The group seeks to advance technical standards, including benchmarks and evaluations, and develop a public library of solutions.

Core objectives of the coalition include:

- Advancing AI safety research to promote responsible development of frontier models, minimize risks, and enable independent, standardized evaluations of capabilities and safety.

- Identifying best practices for the responsible development and deployment of frontier models, helping the public understand the nature, capabilities, limitations, and impact of the technology.

- Collaborating with policymakers, academics, civil society and companies to share knowledge about trust and safety risks.

- Supporting efforts to develop applications that can help meet society’s greatest challenges, such as climate change mitigation and adaptation, early cancer detection and prevention, and combating cyber threats.

The Forum describes ‘frontier models’ as large-scale machine learning models that exceed the capabilities of current top-tier models and can accomplish a diverse array of tasks.

The group plans to tackle responsible AI development with a focus on three key areas: identifying best practices, advancing AI safety research, and facilitating information sharing among companies and governments.

“The Forum will coordinate research to progress these efforts in areas such as adversarial robustness, mechanistic interpretability, scalable oversight, independent research access, emergent behaviors and anomaly detection,” it said.

Other future actions listed include establishing an advisory board to guide the group’s strategy and priorities, and setting up institutional arrangements such as a charter, governance, and funding, with a working group and executive board. The Forum indicated its support for existing industry efforts like the Partnership on AI and MLCommons and plans to consult with civil society and governments on “meaningful ways to collaborate.”

Though the coalition has just four members, it is open to organizations that actively develop and implement these frontier models, show a firm commitment to their safety through both technical and institutional means, and are ready to further the Forum’s objectives through active participation in joint initiatives, according to a list of membership requirements.

“The Forum welcomes organizations that meet these criteria to join this effort and collaborate on ensuring the safe and responsible development of frontier AI models,” the group wrote.

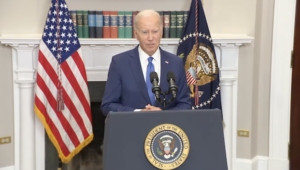

These goals are still somewhat nebulous, as were the results of the White House meeting last week. In his remarks regarding the meeting, President Biden acknowledged the transformative impact of AI and the need for responsible innovation with safety as a priority. He also noted the need for bipartisan legislation to regulate the collection of personal data, safeguard democracy, and address the potential disruption of jobs and industries caused by advanced AI. To achieve this, Biden said, a common framework to govern AI development is needed.

President Biden delivers remarks following a meeting with seven prominent AI companies last week. (Source: White House)

“Social media has shown us the harm that powerful technology can do without the right safeguards in place. And I’ve said at the State of the Union that Congress needs to pass bipartisan legislation to impose strict limits on personal data collection, ban targeted advertising to kids, and require companies to put health and safety first,” the President said, noting that we must remain “clear-eyed and vigilant about the threats emerging technologies can pose – don’t have to, but can pose – to our democracy and our values.”

Critics have said these attempts at self-regulation on the part of the major AI players could be creating a new generation of technology monopolies.

Shane Orlick, president of AI writing platform Jasper, told EnterpriseAI in an email that there is a need for ongoing, in-depth engagement between the government and AI innovators.

“AI will affect all aspects of life and society, and with any technology this comprehensive, the government must play a role in protecting us from unintended consequences and establishing a single source of truth surrounding the important questions these new innovations create, including what the parameters around safe AI actually are,” Orlick said.

He continued: “The Administration’s recent actions are promising, but it’s essential to deepen engagement between government and innovators over the long term to put and keep ethics at the center of AI, deepen and sustain trust over the inevitable speed bumps, and ultimately ensure AI is a force for good. That also includes ensuring that regulations aren’t defusing competition, creating a new generation of tech monopolies – and instead invite the entire AI community to responsibly take part in this societal transformation.”
