

In today's fast-paced world, we're bombarded with more information than we can handle. We're increasingly used to receiving more information in less time, which leads to frustration when we have to read extensive documents or books. That's where extractive summarization steps in. It gets to the heart of a text by pulling out key sentences from an article, piece, or page, giving us a snapshot of its most important points.

For anyone who needs to understand large documents without reading every word, it's a game changer.

In this article, we delve into the fundamentals and applications of extractive summarization. We'll examine the role of Large Language Models, particularly BERT (Bidirectional Encoder Representations from Transformers), in enhancing the process. The article also includes a hands-on tutorial on using BERT for extractive summarization, showcasing its practicality in condensing large volumes of text into informative summaries.

Extractive summarization is a prominent technique in the field of natural language processing (NLP) and text analysis. With it, key sentences or phrases are carefully selected from the original text and combined to create a concise and informative summary. This involves meticulously sifting through the text to identify the most crucial elements and the central ideas or arguments presented in the chosen piece.

Whereas abstractive summarization involves generating entirely new sentences that are often not present in the source material, extractive summarization sticks to the original text. It doesn't alter or paraphrase; instead, it extracts sentences exactly as they appear, preserving the original wording and structure. This way, the summary stays true to the source material's tone and content. Extractive summarization is extremely useful in cases where the accuracy of the information and the preservation of the author's original intent are a priority.

It has many different uses, such as summarizing news articles, academic papers, or lengthy reports. The process effectively conveys the original content's message without the potential biases or reinterpretations that can occur with paraphrasing.

1. Text Parsing

This initial step involves breaking the text down into its essential elements, primarily sentences and phrases. The goal is to identify the basic units (sentences, in this context) that the algorithm will later evaluate for inclusion in a summary, much like dissecting a text to understand its structure and individual components.

For instance, the model would analyze a four-sentence paragraph by breaking it down into the following four sentences.

- The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia.

- They were constructed as tombs for pharaohs.

- The Great Pyramids are the most famous.

- These structures symbolize architectural brilliance.
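A minimal sketch of this parsing step in Python, assuming a naive rule that a sentence ends at ".", "!" or "?" followed by whitespace. The helper name split_sentences is hypothetical; dedicated sentence tokenizers such as those in NLTK or spaCy handle abbreviations and other edge cases that this regex does not.

```python
import re


def split_sentences(text):
    """Split text into sentences at ., ! or ? followed by whitespace.

    Deliberately naive: it mis-splits abbreviations such as "U.S. Army".
    """
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


paragraph = (
    "The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. "
    "They were constructed as tombs for pharaohs. "
    "The Great Pyramids are the most famous. "
    "These structures symbolize architectural brilliance."
)

for i, sentence in enumerate(split_sentences(paragraph), start=1):
    print(i, sentence)
```

Each printed line is one candidate unit that the later stages will score.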

2. Feature Extraction

In this stage, the algorithm analyzes each sentence to identify characteristics, or "features", that might indicate its importance to the overall text. Common features include the frequency and repetition of keywords and phrases, the length of the sentence, its position in the text, and the presence of specific keywords or phrases that are central to the text's main topic.

Below is an example of the features a model might extract for the first sentence, "The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia."

| Feature | Value |
|---|---|
| Frequency | "Pyramids of Giza", "ancient Egypt", "millennia" |
| Sentence Length | Moderate |
| Position in Text | Introductory, sets the topic |
| Specific Keywords | "Pyramids of Giza", "ancient Egypt" |
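The features in the table can be approximated with simple counting. The sketch below is an illustration only, not how BERT or any particular library computes features. It assumes a small, hand-picked stopword list, measures length in words, records position as a zero-based index, and treats a content word as a "repeated term" if it occurs more than once in the whole text. All names are hypothetical.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list for this example only
STOPWORDS = {"a", "an", "and", "are", "as", "for", "in", "most", "of",
             "the", "these", "they", "were"}


def split_sentences(text):
    # Naive rule: a sentence ends at ., ! or ? followed by whitespace
    return [p for p in re.split(r"(?<=[.!?])\s+", text.strip()) if p]


def content_words(sentence):
    # Lowercased alphabetic tokens that are not stopwords
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS]


def extract_features(text):
    sentences = split_sentences(text)
    # How often each content word occurs across the whole text
    text_freq = Counter(w for s in sentences for w in content_words(s))
    features = []
    for position, sentence in enumerate(sentences):
        features.append({
            "sentence": sentence,
            "position": position,             # 0 = opening sentence
            "length": len(sentence.split()),  # word count, stopwords included
            # content words occurring more than once in the whole text
            "repeated_terms": sorted({w for w in content_words(sentence) if text_freq[w] > 1}),
        })
    return features


paragraph = (
    "The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. "
    "They were constructed as tombs for pharaohs. "
    "The Great Pyramids are the most famous. "
    "These structures symbolize architectural brilliance."
)

for f in extract_features(paragraph):
    print(f["position"], f["length"], f["repeated_terms"])
```

In this short paragraph only "pyramids" repeats, so it is the sole repeated term for the first and third sentences.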

3. Scoring Sentences

Each sentence is assigned a score based on its content and features. This score reflects the sentence's perceived importance in the context of the entire text. Sentences that score higher are deemed to carry more weight or relevance.

Simply put, this process rates each sentence by its potential importance to a summary of the entire text.

| Sentence Score | Sentence | Explanation |
|---|---|---|
| 9 | The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. | It's the introductory sentence that sets the topic and context, containing key terms like "Pyramids of Giza" and "ancient Egypt". |
| 8 | They were constructed as tombs for pharaohs. | This sentence provides critical historical information about the Pyramids, explaining their purpose and significance. |
| 3 | The Great Pyramids are the most famous. | Although this sentence adds specific information about the Great Pyramids, it's considered less crucial in the broader context of summarizing the overall significance of the Pyramids. |
| 7 | These structures symbolize architectural brilliance. | This summarizing statement captures the overarching significance of the Pyramids. |
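One classic way to automate scoring is to average the normalized frequencies of a sentence's content words, optionally adding a bonus for position. The sketch below assumes that frequency-based scheme, a tiny stopword list, and an arbitrary 0.5 bonus for the opening sentence; the function name is hypothetical, and the scores in the table above were not produced this way.

```python
import re
from collections import Counter

# Hypothetical stopword list, kept tiny for the example
STOPWORDS = {"a", "an", "and", "are", "as", "for", "in", "most", "of",
             "the", "these", "they", "were"}


def score_sentences(text, position_bonus=0.5):
    """Return (score, sentence) pairs in document order.

    score = mean of (word frequency / highest word frequency) over the
    sentence's content words, plus position_bonus for the first sentence.
    """
    sentences = [p for p in re.split(r"(?<=[.!?])\s+", text.strip()) if p]
    tokenized = [[w for w in re.findall(r"[a-z]+", s.lower()) if w not in STOPWORDS]
                 for s in sentences]
    freq = Counter(w for toks in tokenized for w in toks)
    top = max(freq.values(), default=1)
    scored = []
    for position, (sentence, toks) in enumerate(zip(sentences, tokenized)):
        term_score = sum(freq[w] / top for w in toks) / len(toks) if toks else 0.0
        bonus = position_bonus if position == 0 else 0.0
        scored.append((term_score + bonus, sentence))
    return scored


paragraph = (
    "The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. "
    "They were constructed as tombs for pharaohs. "
    "The Great Pyramids are the most famous. "
    "These structures symbolize architectural brilliance."
)

# Print sentences from highest to lowest score
for score, sentence in sorted(score_sentences(paragraph), reverse=True):
    print(f"{score:.2f}  {sentence}")
```

This crude scorer still ranks the opening sentence first, but it rates "The Great Pyramids are the most famous." above the second and fourth sentences simply because it repeats "pyramids". That illustrates why pure word counting can disagree with a more holistic judgment like the one in the table.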

4. Selection and Aggregation

The final phase involves selecting the highest-scoring sentences and compiling them into a summary. When done carefully, this ensures that the summary remains coherent and collectively representative of the main ideas and themes of the original text.

To create an effective summary, the algorithm must balance several goals: including the important sentences, keeping the summary concise, avoiding redundancy, and ensuring that the selected sentences provide a clear and comprehensive overview of the entire original text.
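A minimal sketch of selection and aggregation, assuming the scores are already known (here, the hand-assigned scores from the table above), a hypothetical Jaccard word-overlap threshold of 0.5 for treating two sentences as redundant, and that the chosen sentences are put back in their original order so the summary reads naturally. The function names are illustrative, not from any library.

```python
import re


def tokens(sentence):
    return set(re.findall(r"[a-z]+", sentence.lower()))


def jaccard(a, b):
    # Word-set overlap: |A & B| / |A | B|
    return len(a & b) / len(a | b) if a | b else 0.0


def select_summary(scored, k, max_overlap=0.5):
    """Pick up to k high-scoring sentences, skipping near-duplicates.

    scored: list of (score, sentence) pairs in document order.
    max_overlap: hypothetical Jaccard threshold for redundancy.
    """
    ranked = sorted(range(len(scored)), key=lambda i: scored[i][0], reverse=True)
    chosen = []
    for i in ranked:
        if len(chosen) == k:
            break
        candidate = tokens(scored[i][1])
        # Skip a sentence that overlaps too much with one already chosen
        if all(jaccard(candidate, tokens(scored[j][1])) <= max_overlap for j in chosen):
            chosen.append(i)
    # Restore document order so the summary reads coherently
    return " ".join(scored[i][1] for i in sorted(chosen))


scored = [
    (9, "The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia."),
    (8, "They were constructed as tombs for pharaohs."),
    (3, "The Great Pyramids are the most famous."),
    (7, "These structures symbolize architectural brilliance."),
]
print(select_summary(scored, k=3))
```

With k=3 this returns the first, second, and fourth sentences, the same three sentences used in the example summary. A near-copy of the first sentence with a high score would be skipped by the overlap check rather than wasting a slot.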

- The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. They were constructed as tombs for pharaohs. These structures symbolize architectural brilliance.

This example is extremely basic, extracting 3 of the 4 sentences for the best overall summary. Reading one extra sentence doesn't hurt, but what happens when the text is longer, say, 3 paragraphs? Let's see how BERT handles that.

Step 1: Installing and Importing the Necessary Packages

We'll be leveraging a pre-trained BERT model through the BERT Extractive Summarizer package (bert-extractive-summarizer). Rather than being a separately fine-tuned summarization model, this library uses BERT to embed each sentence, clusters those embeddings, and selects the sentences closest to the cluster centroids.

!pip install bert-extractive-summarizer

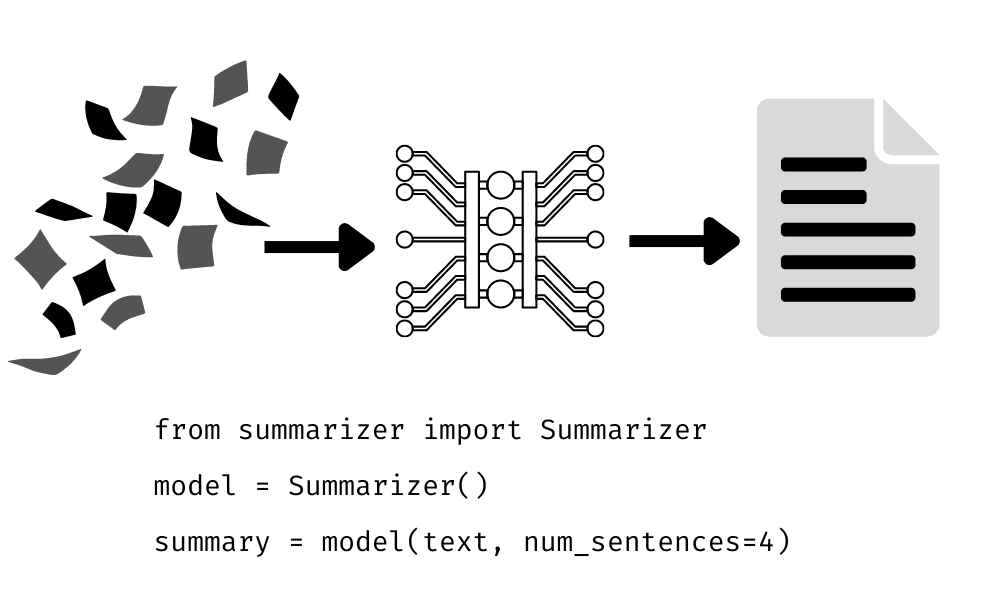

from summarizer import Summarizer

Step 2: Introducing the Summarizer

The Summarizer class imported from the summarizer package is an extractive text summarization tool. It uses a BERT model to analyze a larger text and extract its key sentences, aiming to retain the most important information in a condensed version of the original content. It's commonly used to summarize lengthy documents efficiently. We create an instance of it, which we'll call model and use in Step 4:

model = Summarizer()
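According to the bert-extractive-summarizer README, the library works by embedding the sentences, running a clustering algorithm, and finding the sentences closest to the cluster centroids. The pure-Python sketch below illustrates that idea under loud assumptions: normalized bag-of-words vectors stand in for BERT embeddings, k-means uses a deterministic farthest-first initialization, and all function names are hypothetical. It is not the library's code.

```python
import math
import re


def tokenize(sentence):
    return re.findall(r"[a-z]+", sentence.lower())


def embed(sentence, vocab):
    # Stand-in for a BERT sentence embedding: an L2-normalized bag-of-words vector
    vec = [0.0] * len(vocab)
    for w in tokenize(sentence):
        vec[vocab[w]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def dist2(a, b):
    # Squared Euclidean distance
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=20):
    # Farthest-first initialization: deterministic and spreads the starting centroids
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(dist2(p, c) for c in centroids)))
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist2(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to its cluster mean (keep it if the cluster is empty)
        centroids = [
            [sum(col) / len(cl) for col in zip(*cl)] if cl else centroids[i]
            for i, cl in enumerate(clusters)
        ]
    return centroids


def cluster_summary(sentences, k):
    vocab = {w: i for i, w in enumerate(sorted({w for s in sentences for w in tokenize(s)}))}
    vectors = [embed(s, vocab) for s in sentences]
    picked = set()
    for centroid in kmeans(vectors, k):
        # The sentence nearest each centroid represents that cluster
        picked.add(min(range(len(sentences)), key=lambda i: dist2(vectors[i], centroid)))
    # Return the picks in their original document order
    return [sentences[i] for i in sorted(picked)]


sentences = [
    "GPUs render graphics for video games.",
    "Video games need fast graphics.",
    "GPUs train machine learning models.",
    "Machine learning models need parallel computing.",
]
print(cluster_summary(sentences, k=2))
```

Here the two gaming sentences and the two machine-learning sentences form separate clusters, so the result contains one sentence from each topic. Because two centroids can occasionally land nearest the same sentence, this sketch may return fewer than k sentences.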

Step 3: Importing Our Text

Here, we'll import any piece of text that we want to test our model on. To test our extractive summarization model, we generated text using ChatGPT 3.5 with the prompt: "Provide a 3-paragraph summary of the history of GPUs and how they are used today."

text = "The history of Graphics Processing Units (GPUs) dates back to the early 1980s when companies like IBM and Texas Instruments developed specialized graphics accelerators for rendering images and improving overall graphical performance. However, it was not until the late 1990s and early 2000s that GPUs gained prominence with the advent of 3D gaming and multimedia applications. NVIDIA's GeForce 256, released in 1999, is often considered the first GPU, as it integrated both 2D and 3D acceleration on a single chip. ATI (later acquired by AMD) also played a significant role in the development of GPUs during this period. The parallel architecture of GPUs, with thousands of cores, allows them to handle multiple computations simultaneously, making them well-suited for tasks that require massive parallelism. Today, GPUs have evolved far beyond their original graphics-centric purpose, now widely used for parallel processing tasks in various fields, such as scientific simulations, artificial intelligence, and machine learning. Industries like finance, healthcare, and automotive engineering leverage GPUs for complex data analysis, medical imaging, and autonomous vehicle development, showcasing their versatility beyond traditional graphical applications. With advancements in technology, modern GPUs continue to push the boundaries of computational power, enabling breakthroughs in various fields through parallel computing. GPUs also remain integral to the gaming industry, providing immersive and realistic graphics for video games where high-performance GPUs enhance visual experiences and support demanding game graphics. As technology progresses, GPUs are expected to play an even more crucial role in shaping the future of computing."

Step 4: Performing Extractive Summarization

Finally, we'll run our summarization model. We pass it two inputs: the text to be summarized and the desired number of sentences in the summary. After processing, it returns an extractive summary, which we then print.

# Specifying the number of sentences in the summary

summary = model(text, num_sentences=4)

print(abstract)

Extractive Summary Output:

The history of Graphics Processing Units (GPUs) dates back to the early 1980s when companies like IBM and Texas Instruments developed specialized graphics accelerators for rendering images and improving overall graphical performance. NVIDIA's GeForce 256, released in 1999, is often considered the first GPU, as it integrated both 2D and 3D acceleration on a single chip. Today, GPUs have evolved far beyond their original graphics-centric purpose, now widely used for parallel processing tasks in various fields, such as scientific simulations, artificial intelligence, and machine learning. As technology progresses, GPUs are expected to play an even more crucial role in shaping the future of computing.

Our model pulled what it judged to be the 4 most important sentences from our text, keeping them word for word and in their original order, to generate this summary!
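Because the summary is extractive, every sentence in it should appear word for word in the input, and the output above also preserves the original sentence order. A small, hypothetical helper can check both properties; it assumes the summary has already been split into a list of sentences and uses plain substring search.

```python
def is_extractive(summary_sentences, source):
    """Return True if every sentence occurs verbatim in source, in the same order."""
    search_from = 0
    for sentence in summary_sentences:
        position = source.find(sentence, search_from)
        if position == -1:
            return False  # missing, paraphrased, or out of order
        search_from = position + len(sentence)
    return True


source = (
    "The Pyramids of Giza, built in ancient Egypt, stood magnificently for millennia. "
    "They were constructed as tombs for pharaohs. "
    "The Great Pyramids are the most famous. "
    "These structures symbolize architectural brilliance."
)

# In order and verbatim
print(is_extractive(["They were constructed as tombs for pharaohs.",
                     "These structures symbolize architectural brilliance."], source))
# Verbatim but out of order
print(is_extractive(["These structures symbolize architectural brilliance.",
                     "They were constructed as tombs for pharaohs."], source))
# A paraphrase, as an abstractive summarizer might produce
print(is_extractive(["The pyramids served as royal tombs."], source))
```

The three calls print True, False, and False respectively; the last one shows how an abstractive rewrite fails a check that any extractive summary passes.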

Extractive summarization with LLMs also comes with challenges:

- Contextual Understanding Limitations

  - While LLMs are proficient at processing and generating language, their understanding of context, especially in longer texts, is limited. They can miss subtle nuances or fail to recognize critical aspects of the text, leading to less accurate or less relevant summaries. More advanced language models generally handle this better.

- Bias in Training Data

  - LLMs learn from vast datasets compiled from various sources, including the internet. These datasets can contain biases, which the models might inadvertently learn and reflect in their summaries, leading to skewed or unfair representations.

- Handling Specialized or Technical Language

  - While LLMs are generally trained on a wide range of general texts, they may not accurately capture specialized or technical language in fields like law, medicine, or other highly technical domains. This lack of exposure to specialized jargon can affect the quality of summaries in these fields. It can be alleviated by training or fine-tuning the model on more specialized and technical text.

It's clear that extractive summarization is more than just a handy tool; it's a growing necessity in our information-saturated age, where we're inundated with walls of text every day. By harnessing technologies like BERT, we can distill complex texts into digestible summaries, saving time and helping us better understand the texts being summarized.

Whether for academic research, business insights, or simply staying informed in a technologically advanced world, extractive summarization is a practical way to navigate the sea of information that surrounds us. As natural language processing continues to evolve, tools like extractive summarization will become even more essential, helping us quickly find and understand the information that matters most in a world where every minute counts.

Original. Reposted with permission.

Kevin Vu manages the Exxact Corp blog and works with many of its talented authors who write about different aspects of Deep Learning.
