
Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible, and which showcases new hardware, software, tools and accelerations for RTX PC users.

Skyscrapers start with strong foundations. The same goes for apps powered by AI.

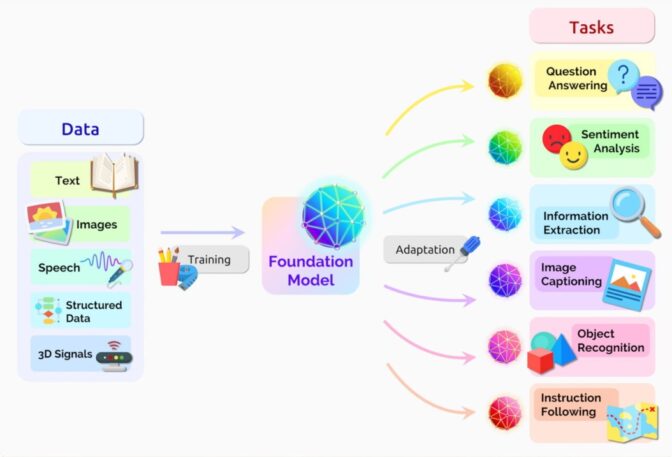

A foundation model is an AI neural network trained on immense amounts of raw data, generally with unsupervised learning.

It’s a type of artificial intelligence model trained to understand and generate human-like language. Imagine giving a computer a huge library of books to read and learn from, so it can understand the context and meaning behind words and sentences, just like a human does.

A foundation model’s deep knowledge base and ability to communicate in natural language make it useful for a broad range of applications, including text generation and summarization, copilot production and computer code analysis, image and video creation, and audio transcription and speech synthesis.

ChatGPT, one of the most notable generative AI applications, is a chatbot built with OpenAI’s GPT foundation model. Now in its fourth version, GPT-4 is a large multimodal model that can ingest text or images and generate text or image responses.

Online apps built on foundation models typically access the models from a data center. But many of these models, and the applications they power, can now run locally on PCs and workstations with NVIDIA GeForce and NVIDIA RTX GPUs.

Foundation Model Uses

Foundation models can perform a variety of functions, including:

- Language processing: understanding and generating text

- Code generation: analyzing and debugging computer code in many programming languages

- Visual processing: analyzing and generating images

- Speech: generating speech from text and transcribing speech to text

They can be used as is or with further refinement. Rather than training an entirely new AI model for each generative AI application, a costly and time-consuming endeavor, users commonly fine-tune foundation models for specialized use cases.

Pretrained foundation models are remarkably capable, thanks to prompts and data-retrieval techniques like retrieval-augmented generation, or RAG. Foundation models also excel at transfer learning, which means they can be trained to perform a second task related to their original purpose.
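To make the RAG idea concrete, here is a minimal sketch of its two core steps: retrieve the passages most relevant to a question, then prepend them to the prompt so the model can ground its answer in them. It assumes a toy bag-of-words cosine-similarity retriever in place of the learned embeddings and vector index a production system would typically use. The documents, the function names `retrieve` and `build_prompt`, and the prompt template are all hypothetical, and the sketch stops at building the augmented prompt rather than calling any real model.

```python
import math
import re
from collections import Counter

# Hypothetical document store standing in for a user's own files.
DOCUMENTS = [
    "The clinic is open Monday to Friday from 8 a.m. to 5 p.m.",
    "Refill requests for prescriptions take two business days.",
    "Parking is free in the lot behind the main building.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        docs,
        key=lambda d: cosine_similarity(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Augmentation step: put the retrieved context in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


print(build_prompt("How long do prescription refill requests take?", DOCUMENTS))
```

The augmented prompt, not the bare question, is what would be sent to the language model. Swapping the toy retriever for embedding-based search changes how documents are ranked, but not the overall retrieve-then-augment flow.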

For example, a general-purpose large language model (LLM) designed to converse with humans can be further trained to act as a customer service chatbot capable of answering inquiries using a corporate knowledge base.

Enterprises across industries are fine-tuning foundation models to get the best performance from their AI applications.
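Transfer learning comes in several forms. The simplest one to sketch keeps the pretrained model frozen and trains only a small new "head" for the second task. The sketch below assumes that variant. It uses hand-set two-dimensional word vectors (`PRETRAINED_EMBEDDINGS`, hypothetical) as a stand-in for a foundation model's learned representations, then trains a logistic-regression head to sort customer-service messages into "billing" or "shipping." Fine-tuning a real LLM, as in the chatbot example, typically also updates the model's own weights (or small adapter layers added to it) with a deep learning framework.

```python
import math

# Hypothetical frozen "pretrained" word vectors. In practice these would come from
# a foundation model; here they are hand-set stand-ins and are never updated.
PRETRAINED_EMBEDDINGS = {
    "refund": [1.0, 0.0],
    "charge": [0.9, 0.1],
    "invoice": [0.8, 0.0],
    "package": [0.0, 1.0],
    "delivery": [0.1, 0.9],
    "tracking": [0.0, 0.8],
}


def encode(text: str) -> list[float]:
    """Frozen base model: average the pretrained vectors of known words."""
    vecs = [PRETRAINED_EMBEDDINGS[w] for w in text.lower().split() if w in PRETRAINED_EMBEDDINGS]
    if not vecs:
        return [0.0, 0.0]
    return [sum(v[i] for v in vecs) / len(vecs) for i in range(2)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_head(examples, epochs: int = 500, lr: float = 0.5):
    """Train only the new logistic-regression head; encode() is never changed."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)  # features from the frozen base
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = p - label  # gradient of log loss with respect to the logit
            w = [w[i] - lr * err * x[i] for i in range(2)]
            b -= lr * err
    return w, b


def predict(head, text: str) -> str:
    """Label 1 means "shipping", label 0 means "billing"."""
    w, b = head
    x = encode(text)
    return "shipping" if sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5 else "billing"


# Small labeled dataset for the second task (hypothetical).
TRAINING = [
    ("refund my charge", 0),
    ("wrong invoice", 0),
    ("where is my package", 1),
    ("delivery tracking please", 1),
]

head = train_head(TRAINING)
print(predict(head, "my delivery is late"))
```

Because only the two head weights and the bias are trained, the second task needs very little labeled data, which is the practical appeal of reusing a pretrained model instead of training from scratch.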

Types of Foundation Models

More than 100 foundation models are in use, a number that continues to grow. LLMs and image generators are the two most popular types of foundation models. And many of them are free for anyone to try, on any hardware, in the NVIDIA API Catalog.

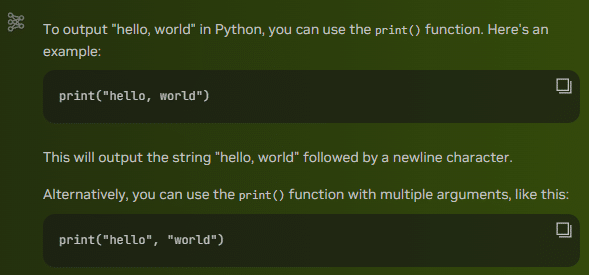

LLMs are models that understand natural language and can respond to queries. Google’s Gemma is one example; it excels at text comprehension, transformation and code generation. When asked about the astronomer Cornelius Gemma, it shared that his “contributions to celestial navigation and astronomy significantly impacted scientific progress.” It also provided information on his key achievements, legacy and other facts.

Building on the Gemma models, which are accelerated with NVIDIA TensorRT-LLM on RTX GPUs, Google’s CodeGemma brings powerful yet lightweight coding capabilities to the community. CodeGemma models are available as 7B and 2B pretrained variants that specialize in code completion and code generation tasks.

MistralAI’s Mistral LLM can follow instructions, complete requests and generate creative text. In fact, it helped brainstorm the headline for this blog, including the requirement that it use a variation of the series’ name “AI Decoded,” and it assisted in writing the definition of a foundation model.

Meta’s Llama 2 is a cutting-edge LLM that generates text and code in response to prompts.

Mistral and Llama 2 are available in the NVIDIA ChatRTX tech demo, running on RTX PCs and workstations. ChatRTX lets users personalize these foundation models by connecting them to personal content, such as documents, doctors’ notes and other data, through RAG. It’s accelerated by TensorRT-LLM for quick, contextually relevant answers. And because it runs locally, results are fast and secure.

Image generators like StabilityAI’s Stable Diffusion XL and SDXL Turbo let users generate stunning, realistic images. StabilityAI’s video generator, Stable Video Diffusion, uses a generative diffusion model to synthesize video sequences with a single image as a conditioning frame.

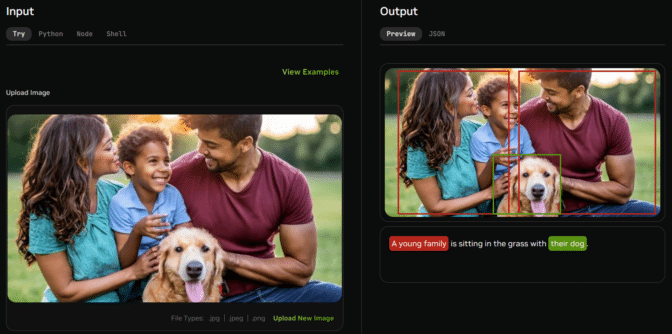

Multimodal foundation models can simultaneously process more than one type of data, such as text and images, to generate more sophisticated outputs.

A multimodal model that works with both text and images could let users upload an image and ask questions about it. These types of models are quickly working their way into real-world applications like customer service, where they can serve as faster, more user-friendly versions of traditional manuals.

Kosmos 2 is Microsoft’s groundbreaking multimodal model designed to understand and reason about visual elements in images.

Think Globally, Run AI Models Locally

GeForce RTX and NVIDIA RTX GPUs can run foundation models locally.

The results are fast and secure. Rather than relying on cloud-based services, users can harness apps like ChatRTX to process sensitive data on their local PC without sharing the data with a third party or needing an internet connection.

Users can choose from a rapidly growing catalog of open foundation models to download and run on their own hardware. This lowers costs compared with using cloud-based apps and APIs, and it eliminates latency and network connectivity issues.

Generative AI is transforming gaming, videoconferencing and interactive experiences of all kinds. Make sense of what’s new and what’s next by subscribing to the AI Decoded newsletter.
