Image from Adobe Firefly

“There were too many of us. We had access to too much money, too much equipment, and little by little, we went insane.”

Francis Ford Coppola wasn’t making a metaphor for AI companies that spend too much and lose their way, but he could have been. Apocalypse Now was epic, but it was also a long, difficult and expensive project to make, much like GPT-4. I’d suggest that the development of LLMs has gravitated toward too much money and too much equipment. And some of the “we just invented general intelligence” hype is a little insane. But now it’s the turn of open source communities to do what they do best: deliver free, competing software using far less money and equipment.

OpenAI has taken over $11Bn in funding, and it’s estimated that GPT-3.5 costs $5-$6m per training run. We know very little about GPT-4 because OpenAI isn’t telling, but I think it’s safe to assume that it isn’t smaller than GPT-3.5. There’s currently a worldwide GPU shortage and, for a change, it’s not because of the latest cryptocoin. Generative AI start-ups are landing $100m+ Series A rounds at huge valuations even though they don’t own any of the IP for the LLM they use to power their product. The LLM bandwagon is in high gear and the money is flowing.

It had looked like the die was cast: only deep-pocketed companies like Microsoft/OpenAI, Amazon, and Google could afford to train hundred-billion-parameter models. Bigger models were assumed to be better models. GPT-3 got something wrong? Just wait until there’s a bigger version and it’ll all be fine! Smaller companies looking to compete had to raise far more capital or be left building commodity integrations in the ChatGPT marketplace. Academia, with far more constrained research budgets, was relegated to the sidelines.

Fortunately, a bunch of smart people and open source projects took this as a challenge rather than a restriction. Researchers at Stanford released Alpaca, a 7-billion-parameter model whose performance comes close to that of GPT-3.5’s 175-billion-parameter model. Lacking the resources to build a training set of the size used by OpenAI, they cleverly chose to take a trained open source LLM, LLaMA, and fine-tune it on a series of GPT-3.5 prompts and outputs instead. Essentially, the model learned what GPT-3.5 does, which turns out to be a very effective strategy for replicating its behavior.

Alpaca is licensed for non-commercial use only, in both code and data, because it uses the open source, non-commercial LLaMA model, and OpenAI explicitly disallows any use of its APIs to create competing products. That does create the tantalizing prospect of fine-tuning a different open source LLM on the prompts and output of Alpaca… creating a third GPT-3.5-like model with different licensing possibilities.

There’s another layer of irony here, in that all the major LLMs were trained on copyrighted text and images available on the Internet, and they didn’t pay a penny to the rights holders. The companies claim the “fair use” exemption under US copyright law, arguing that the use is “transformative”. However, when it comes to the output of the models they build with free data, they really don’t want anyone to do the same thing to them. I expect this will change as rights holders wise up, and it may end up in court at some point.

This is a separate and distinct point from the one raised by authors of restrictively licensed open source who, in the case of generative AI for Code products like Copilot, object to their code being used for training on the grounds that the license isn’t being followed. The problem for individual open source authors is that they need to show standing (substantive copying) and that they’ve incurred damages. And since the models make it hard to link output code to input (the lines of source code written by the author), and there’s no economic loss (the code is supposed to be free), it’s far harder to make a case. That is unlike for-profit creators (e.g., photographers), whose entire business model is licensing or selling their work, and who are represented by aggregators like Getty Images that can show substantive copying.

Another interesting thing about LLaMA is that it came out of Meta. It was originally released just to researchers and then leaked to the world via BitTorrent. Meta is in a fundamentally different business from OpenAI, Microsoft, Google, and Amazon in that it isn’t trying to sell you cloud services or software, and so it has very different incentives. It has open-sourced its compute designs in the past (the Open Compute Project) and seen the community improve on them; it understands the value of open source.

Meta could become one of the most important open source AI contributors. Not only does it have massive resources, but it also benefits from a proliferation of great generative AI technology: there will be more content for it to monetize on social media. Meta has released three other open source AI models: ImageBind (multi-dimensional data indexing), DINOv2 (computer vision) and Segment Anything. The latter identifies distinct objects in images and is released under the highly permissive Apache License.

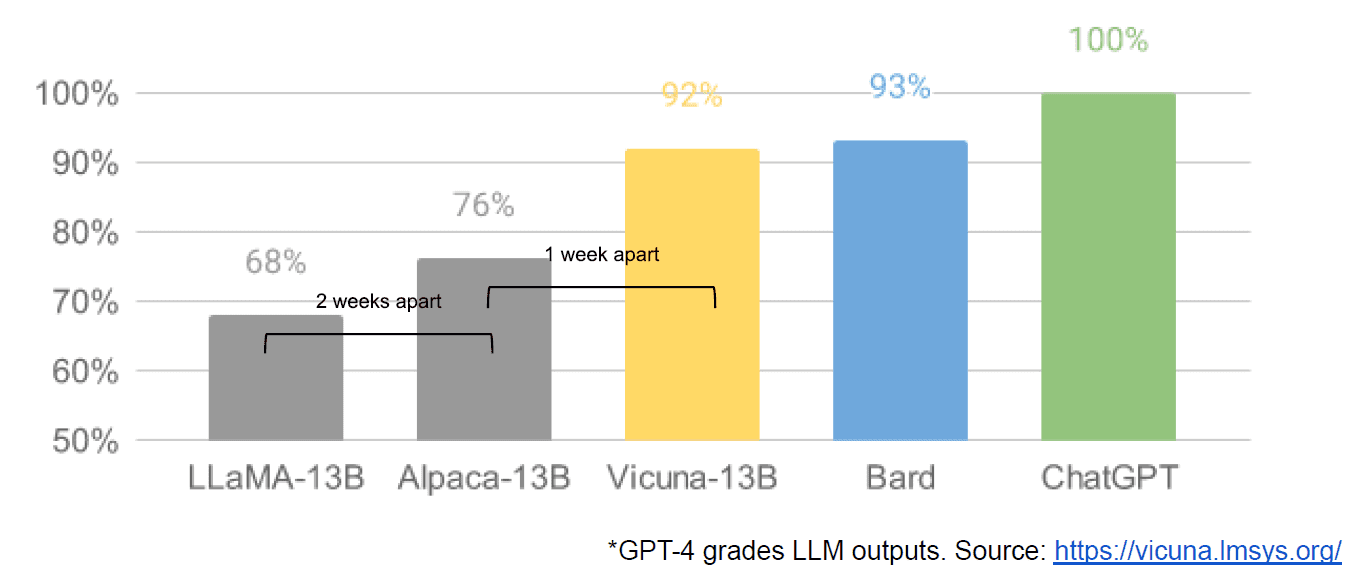

Lastly we additionally had the alleged leaking of an inside Google doc “We Have No Moat, and Neither Does OpenAI” which takes a dim view of closed fashions vs. the innovation of communities producing far smaller, cheaper fashions that carry out near or higher than their closed supply counterparts. I say allegedly as a result of there isn’t a approach to confirm the supply of the article as being Google inside. Nonetheless, it does include this compelling graph:

To be clear, the vertical axis is GPT-4’s grading of the LLM outputs.

Stable Diffusion, which synthesizes images from text, is another example of where open source generative AI has been able to advance faster than proprietary models. A recent iteration of that project (ControlNet) has improved it to the point where it has surpassed DALL-E 2’s capabilities. This came about through a whole lot of tinkering all over the world, resulting in a pace of advance that’s hard for any single institution to match. Some of these tinkerers figured out how to make Stable Diffusion faster to train and able to run on cheaper hardware, enabling shorter iteration cycles for more people.

And so we have come full circle. Not having too much money and too much equipment has inspired an ingenious level of innovation by a whole community of ordinary people. What a time to be an AI developer.

Mathew Lodge is CEO of Diffblue, an AI for Code startup. He has 25+ years’ diverse experience in product leadership at companies such as Anaconda and VMware. Lodge currently serves on the board of the Good Law Project and is Deputy Chair of the Board of Trustees of the Royal Photographic Society.