Responsible AI

The Responsible AI initiative looks at how organizations define and approach responsible AI practices, policies, and standards. Drawing on global executive surveys and smaller, curated expert panels, the program gathers perspectives from diverse sectors and geographies with the aim of delivering actionable insights on this nascent yet important focus area for leaders across industry.

For the second year in a row, MIT Sloan Management Review and Boston Consulting Group have assembled an international panel of AI experts to help us understand how responsible artificial intelligence (RAI) is being implemented across organizations worldwide. For our final question in this year’s research cycle, we asked our academic and practitioner panelists to respond to this provocation: As the business community becomes more aware of AI’s risks, companies are making adequate investments in RAI.

While their reasons vary, most panelists recognize that RAI investments are falling short of what’s needed: Eleven out of 13 were reluctant to agree that organizations’ investments in responsible AI are “adequate.” The panelists largely affirmed findings from our 2023 RAI global survey, in which less than half of respondents said they believe their company is prepared to make adequate investments in RAI. This is a pressing leadership challenge for companies that are prioritizing AI and must manage AI-related risks.

The need to grow investments in RAI marks a significant shift now that “AI-related risks are impossible to ignore,” observes Linda Leopold, head of responsible AI and data at H&M Group. Whereas making adequate investments in RAI was once an important but not particularly urgent issue, Leopold suggests that recent advances in generative AI and the proliferation of third-party tools have made the urgency of such investments clear.

Making adequate investments in RAI is complicated by measurement challenges. There are no industry standards that offer guidance, so how do you know when or if your investments are sufficient? What’s more, rational executives can have different opinions about what constitutes an adequate investment. What’s clear is that epistemic questions shouldn’t be a barrier to action, especially when AI risks pose real business threats.

The Panelists Respond

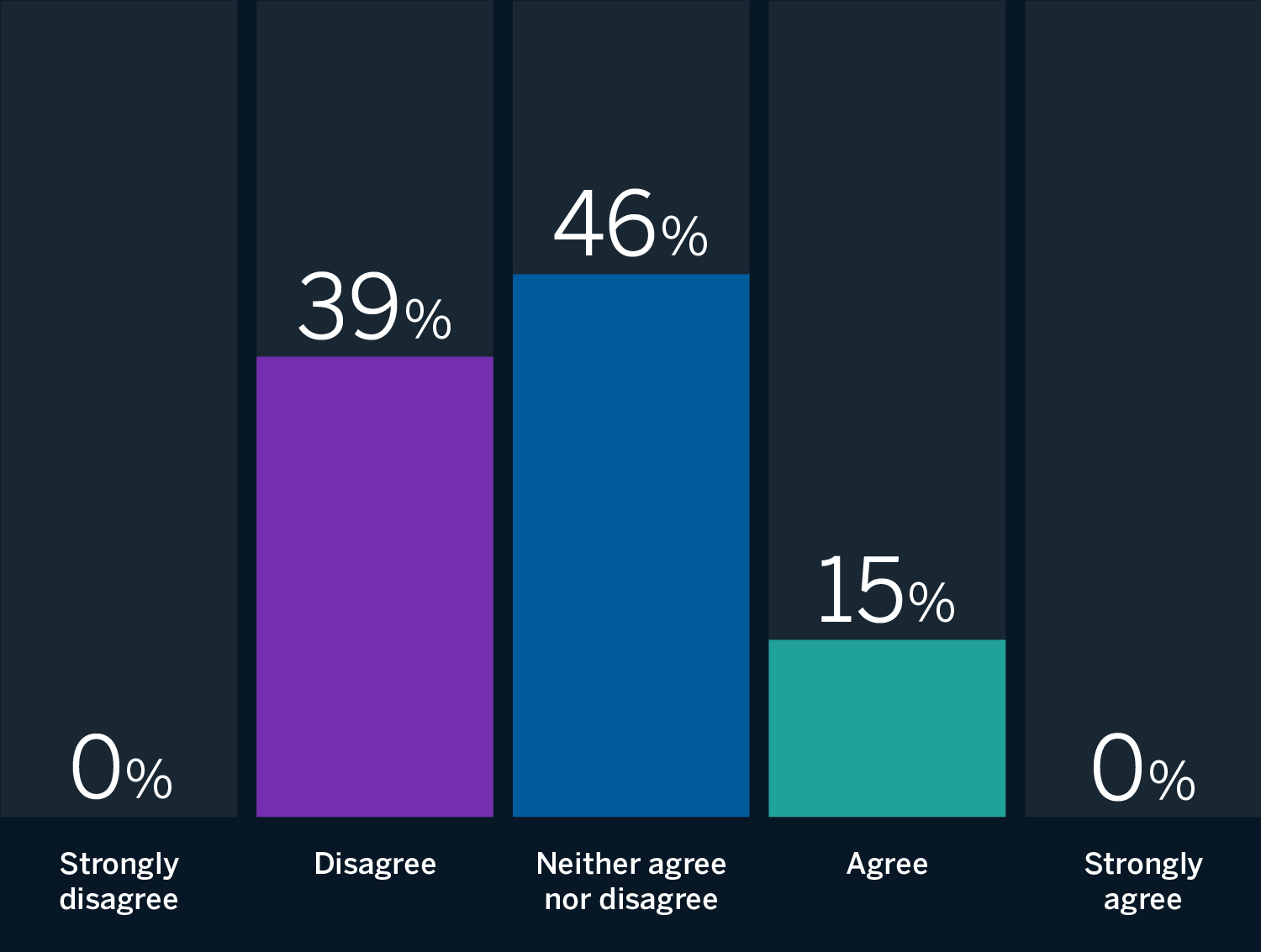

It’s unclear if organizations are making appropriate investments in RAI.

Most panelists are reluctant to agree that organizations are making “adequate” investments in RAI.

Source: Responsible AI panel of 13 experts in artificial intelligence strategy.

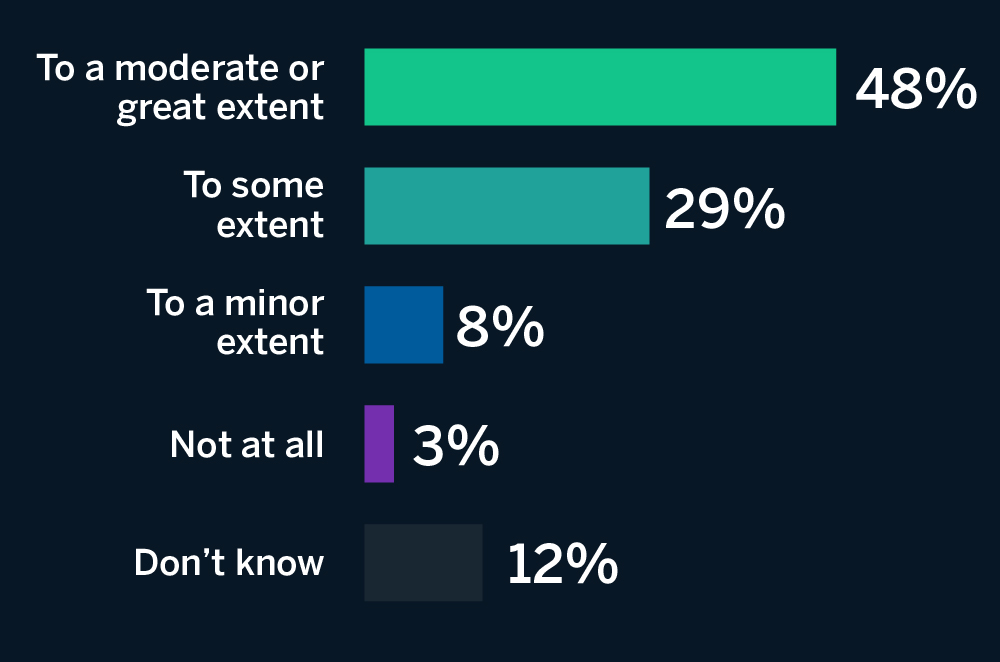

Responses from the 2023 Global Executive Survey

“To what extent do you believe that your organization is prepared to invest (reduce revenue, increase costs) to comply with responsible AI initiatives?”

Source: Survey data of 1,240 respondents representing organizations reporting at least $100 million in annual revenues. These respondents represented companies in 59 industries and 87 countries. The survey fielded in China was localized.

Companies Are Investing in RAI, but Not Enough

Panelists offered several reasons why companies aren’t making adequate investments in responsible AI. Several panelists suggested that profit motives are a factor. Tshilidzi Marwala, rector of the United Nations University and U.N. undersecretary general, contends that only “20% of the companies aware of AI risks are investing in RAI programs. The rest are more concerned about how they can use AI to maximize their profits.” Simon Chesterman, the David Marshall Professor and vice provost at the National University of Singapore, adds, “The gold rush around generative AI has led to a downsizing of safety and security teams in tech companies, and a shortened path to market for new products. … Fear of missing out is triumphing, in many organizations if not all, over risk management.”

Another reason behind underinvestment is that greater use of AI throughout an enterprise expands the scope of RAI and increases required investments. As Philip Dawson, head of AI policy at Armilla AI, observes, “Investment in AI capabilities continues to vastly outstrip investment in AI safety and tools to operationalize AI risk management. Enterprises are playing catch-up in an uphill battle that’s only getting steeper.” Leopold adds that generative AI and an increasing number of possible applications “significantly increases the size of the audience that responsible AI programs need to reach.”

A third reason is that growing awareness of AI risks doesn’t always translate into accurate assessments of those risks. Oarabile Mudongo, a policy specialist at the African Observatory on Responsible AI, suggests that some companies might not be investing enough in their RAI programs because they “underestimate the extent of AI risks.”

How Do You Know Whether You’re Investing Enough?

Other panelists pointed out that although making adequate investments in RAI is important, it’s hard to gauge whether the investment is large enough or effective. How should companies calculate a return on RAI investments? What counts as an “adequate” return? What even counts as an investment? David R. Hardoon, CEO of Aboitiz Data Innovation and chief data and AI officer of Union Bank of the Philippines, asks, “How can the investments in RAI be broadly, and generally, considered adequate if (1) all companies are not subjected to the same levels of standards, (2) we have yet to agree on what is ‘sufficient’ or ‘acceptable,’ or (3) we don’t have a mechanism to verify third parties?”

Triveni Gandhi, responsible AI lead at Dataiku, agrees: “Among those companies that have taken the time to invest in RAI programs, there is wide variation in how these programs are actually designed and implemented. The lack of cohesive or clear expectations on how to implement or operationalize RAI values makes it difficult for organizations to start investing efficiently.”

“It’s difficult to determine the extent to which companies are making adequate investments in responsible AI programs, as there is a large spectrum of awareness and relevance,” asserts Giuseppe Manai, cofounder, chief operating officer, and chief scientist at Stemly. Aisha Naseer, director of research at Huawei Technologies (UK), adds, “It is not evident whether these investments are adequate or enough, as these range from trivial to huge funds being allocated for the control of AI and associated risk mitigation.”

Recommendations

For organizations seeking to adequately invest in RAI, we recommend the following:

1. Build leadership awareness of AI risks. Leaders need to be aware that using AI is a double-edged sword that introduces risks as well as benefits. Focusing on investments that increase the benefits of AI without a commensurate focus on managing its risks undermines value creation in two ways. First, without appropriate guardrails, the benefits from AI may be underwhelming: Our research has found that innovation with AI increases when RAI practices are in place. Second, risks from AI are proliferating as quickly as the tools they’re embedded in. A robust awareness of AI’s risks and benefits supports leadership efforts to manage risks and grow the company. Building management awareness can take many forms, including executive education, discussion forums, and RAI programs that have a variety of stakeholders with different types of exposure to (and experience with) AI risks.

2. Accept that investing in RAI will be an ongoing process. As AI development and use increase, with no foreseeable end, RAI practices and implementations need to adapt as well. That requires ongoing RAI investment to continually evolve an RAI program. With the regulatory landscape poised for change in the European Union, the U.S., and Asia, leaders will face growing pressure to evolve their AI-focused risk management systems. Waiting to invest (or halting investment) in these systems, whether technologically, culturally, or financially, can undermine business growth. When new regulations and industry standards take effect, rapidly overhauling processes to meet compliance requirements can interfere with AI-related innovation. It’s better to co-evolve RAI programs with AI development so that they complement each other rather than create this unnecessary interference. As companies scale their AI investments, so, too, will their RAI investments need to grow. But those companies that are already behind will need to move quickly and invest aggressively in RAI to avoid always lagging behind.

3. Develop RAI investment metrics. Investing in RAI is complex, and what does a return on such an investment even look like? Much like with cybersecurity investments, the best RAI returns may be the sound of silence when AI-related issues are discovered before they create damage to brand reputation, regulatory issues, and increased costs. That said, silence should not be mistaken for adequate investment. As our survey results show, companies making minimal investment might not find issues simply because they aren’t looking for them. Leaders should align on what constitutes an RAI investment, the appropriate metrics to evaluate these investments, and who will decide on investment levels. Metrics that gauge RAI program success are necessary to support RAI programs over time, but they can be a lagging indicator. Metrics should also consider forward-looking dimensions such as leadership commitment, program adoption, training and workforce needs, and culture change. Leaders must also determine how RAI funding is related to funding for AI projects. Should every large AI project have a budget set aside for RAI? Or should RAI funding be independent of specific projects or some combination of project-based (decentralized) and institutional (centralized) investments?
