
Back in 2018, BERT got people talking about how machine learning models were learning to read and speak. Today, large language models, or LLMs, are growing up fast, showing dexterity in all sorts of applications.

They’re, for one, speeding drug discovery, thanks to research from the Rostlab at Technical University of Munich, as well as work by a team from Harvard, Yale and New York University and others. In separate efforts, they applied LLMs to interpret the strings of amino acids that make up proteins, advancing our understanding of these building blocks of biology.

It’s one of many inroads LLMs are making in healthcare, robotics and other fields.

A Brief History of LLMs

Transformer models, neural networks defined in 2017 that can learn context in sequential data, got LLMs started.

Researchers behind BERT and other transformer models made 2018 “a watershed moment” for natural language processing, a report on AI said at the end of that year. “Quite a few experts have claimed that the release of BERT marks a new era in NLP,” it added.

Developed by Google, BERT (aka Bidirectional Encoder Representations from Transformers) delivered state-of-the-art scores on benchmarks for NLP. In 2019, Google announced that BERT powers the company’s search engine.

Google released BERT as open-source software, spawning a family of follow-ons and setting off a race to build ever larger, more powerful LLMs.

For instance, Meta created an enhanced version called RoBERTa, released as open-source code in July 2019. For training, it used “an order of magnitude more data than BERT,” the paper said, and leapt ahead on NLP leaderboards. A scrum followed.

Scaling Parameters and Markets

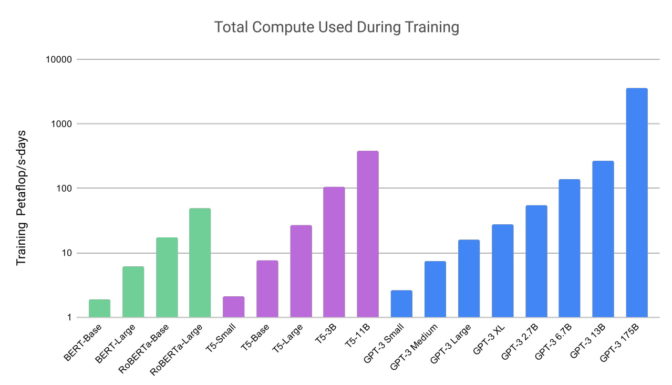

For convenience, score is often kept by the number of an LLM’s parameters or weights, measures of the strength of a connection between two nodes in a neural network. BERT had 110 million, RoBERTa had 123 million, then BERT-Large weighed in at 354 million, setting a new record, but not for long.
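
To make the idea of a parameter count concrete, here is a minimal sketch in Python. It assumes a toy fully connected network, not the transformer architecture of BERT or any other model named here, and the function name `count_parameters` and the layer sizes are hypothetical, chosen only for illustration. Each connection between two nodes contributes one weight, and each output node contributes one bias.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a toy fully connected network.

    layer_sizes lists the number of nodes in each layer, input first.
    This is an illustrative assumption, not how any real LLM is laid out.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between two nodes
        total += n_out         # one bias per node in the receiving layer
    return total


# Hypothetical toy network: 4 inputs, 3 hidden nodes, 2 outputs.
toy_network = [4, 3, 2]
print(count_parameters(toy_network))  # prints 23
```

The same bookkeeping, applied to far larger and differently structured layers, is what produces the headline counts in the millions and billions.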

In 2020, researchers at OpenAI and Johns Hopkins University announced GPT-3, with a whopping 175 billion parameters, trained on a dataset with nearly a trillion words. It scored well on a slew of language tasks and even handled three-digit arithmetic.

“Language models have a wide range of beneficial applications for society,” the researchers wrote.

Experts Feel ‘Blown Away’

Within weeks, people were using GPT-3 to create poems, programs, songs, websites and more. Recently, GPT-3 even wrote an academic paper about itself.

“I just remember being kind of blown away by the things that it could do, for being just a language model,” said Percy Liang, a Stanford associate professor of computer science, speaking in a podcast.

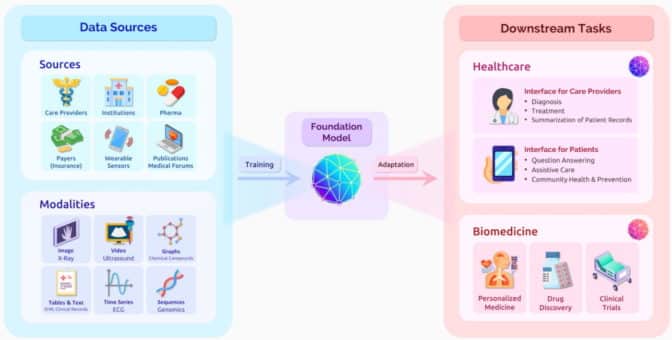

GPT-3 helped motivate Stanford to create a center Liang now leads, exploring the implications of what it calls foundation models that can handle a wide variety of tasks well.

Toward Trillions of Parameters

Last year, NVIDIA announced the Megatron 530B LLM that can be trained for new domains and languages. It debuted with tools and services for training language models with trillions of parameters.

“Large language models have proven to be flexible and capable … able to answer deep domain questions without specialized training or supervision,” Bryan Catanzaro, vice president of applied deep learning research at NVIDIA, said at the time.

Making it even easier for users to adopt the powerful models, the NVIDIA NeMo LLM service debuted in September at GTC. It’s an NVIDIA-managed cloud service to adapt pretrained LLMs to perform specific tasks.

Transformers Transform Drug Discovery

The advances LLMs are making with proteins and chemical structures are also being applied to DNA.

Researchers aim to scale their work with NVIDIA BioNeMo, a software framework and cloud service to generate, predict and understand biomolecular data. Part of the NVIDIA Clara Discovery collection of frameworks, applications and AI models for drug discovery, it supports work in widely used protein, DNA and chemistry data formats.

NVIDIA BioNeMo features multiple pretrained AI models, including the MegaMolBART model, developed by NVIDIA and AstraZeneca.

LLMs Enhance Computer Vision

Transformers are also reshaping computer vision as powerful LLMs replace traditional convolutional AI models. For example, researchers at Meta AI and Dartmouth designed TimeSformer, an AI model that uses transformers to analyze video with state-of-the-art results.

Experts predict such models could spawn all sorts of new applications in computational photography, education and interactive experiences for mobile users.

In related work earlier this year, two companies released powerful AI models to generate images from text.

OpenAI announced DALL-E 2, a transformer model with 3.5 billion parameters designed to create realistic images from text descriptions. And recently, Stability AI, based in London, launched Stable Diffusion.

Writing Code, Controlling Robots

LLMs also help developers write software. Tabnine (a member of NVIDIA Inception, a program that nurtures cutting-edge startups) claims it’s automating up to 30% of the code generated by a million developers.

Taking the next step, researchers are using transformer-based models to teach robots used in manufacturing, construction, autonomous driving and personal assistants.

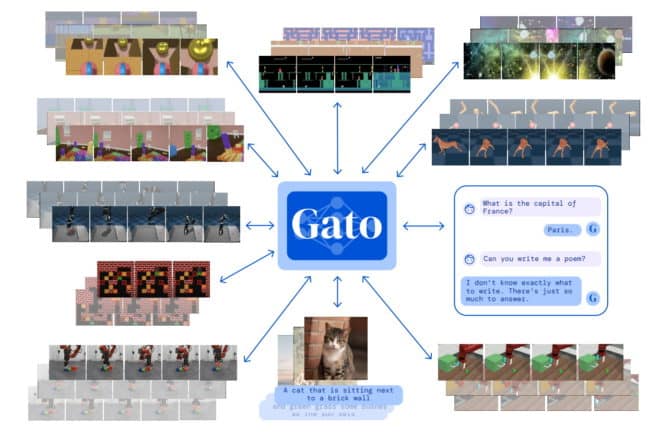

For example, DeepMind developed Gato, an LLM that taught a robotic arm how to stack blocks. The 1.2-billion parameter model was trained on more than 600 distinct tasks so it could be useful in a variety of modes and environments, whether playing games or animating chatbots.

“By scaling up and iterating on this same basic approach, we can build a useful general-purpose agent,” researchers said in a paper posted in May.

It’s another example of what the Stanford center in a July paper called a paradigm shift in AI. “Foundation models have only just begun to transform the way AI systems are built and deployed in the world,” it said.

Learn how companies around the world are implementing LLMs with NVIDIA Triton for many use cases.
