Image by frimufilms on Freepik

This is an era where AI breakthroughs arrive every day. We didn’t have many AI-generated products in public a few years ago, but now the technology is accessible to everyone. That is excellent for individual creators or companies that want to take advantage of the technology to develop something complex, which might otherwise take a long time.

One of the most incredible breakthroughs that changed how we work is the release of the GPT-3.5 model by OpenAI. What is the GPT-3.5 model? If I let the model speak for itself, the answer is “a highly advanced AI model in the field of natural language processing, with vast improvements in generating contextually accurate and relevant text”.

OpenAI provides an API for the GPT-3.5 model that we can use to develop a simple app, such as a text summarizer. To do that, we can use Python to integrate the model API into our intended application. What does the process look like? Let’s get into it.

There are a few prerequisites before following this tutorial, including:

– Knowledge of Python, including how to use external libraries and an IDE

– Understanding of APIs and handling endpoints with Python

– Having access to the OpenAI APIs

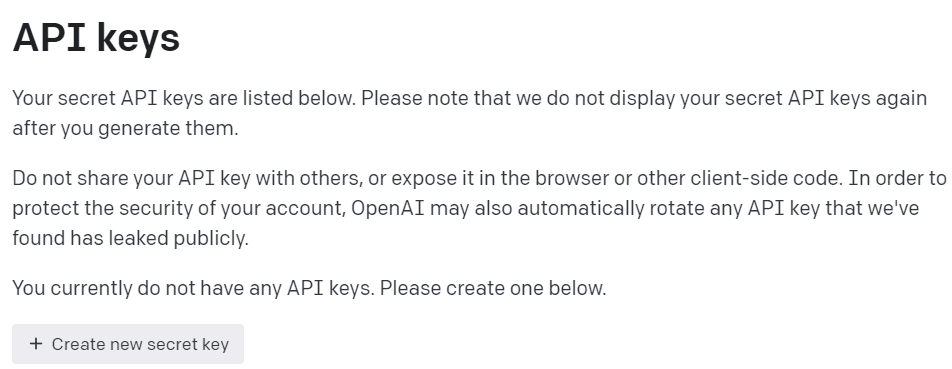

To obtain OpenAI API access, we must register on the OpenAI Developer Platform and visit the View API keys page within our profile. On that page, click the “Create new secret key” button to acquire API access (see image below). Remember to save the key, as it will not be shown again after that.

Image by Author

With all the preparation ready, let’s try to understand the basics of the OpenAI API models.

The GPT-3.5 model family was designed for many language tasks, and each model in the family excels at certain tasks. For this tutorial, we will use gpt-3.5-turbo, as it was the recommended model when this article was written because of its capability and cost-efficiency.

We often see text-davinci-003 in the OpenAI tutorials, but we will use the current model for this tutorial. We will rely on the ChatCompletion endpoint instead of Completion because the current recommended model is a chat model. Even though it is named a chat model, it works for any language task.

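Let’s try to understand how the API works. First, we need to install the OpenAI package (for example, with pip install openai). Note that the openai.ChatCompletion interface used in this tutorial belongs to the package releases before 1.0.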

After we have finished installing the package, we will try to use the API by connecting through the ChatCompletion endpoint. However, we need to set up the environment before we proceed.

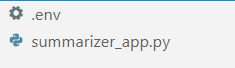

In your favorite IDE (for me, it’s VS Code), create two files called .env and summarizer_app.py, similar to the image below.

Image by Author

The summarizer_app.py file is where we will build our simple summarizer application, and the .env file is where we will store our API key. For security reasons, it is always advised to keep the API key in a separate file rather than hard-code it in the Python file.

In the .env file, put the following line and save the file. Replace your_api_key_here with your actual API key. Don’t turn the API key into a string object; leave it as it is.

OPENAI_API_KEY=your_api_key_here
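Because a missing or placeholder key only fails later, when the API is called, it can help to check the key as soon as the application starts. The following is a minimal sketch using only the standard library; get_api_key is a hypothetical helper name that is not part of the openai or dotenv packages, and it assumes the variables from .env have already been loaded into the environment.

```python
import os


def get_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Return the API key from a mapping of environment variables.

    Hypothetical helper for illustration: it fails early when the key
    is missing, empty, or still the placeholder from the .env template.
    """
    key = env.get(name, "").strip()
    if not key or key == "your_api_key_here":
        raise RuntimeError(f"{name} is not set; add it to the .env file")
    return key


# Example with an explicit mapping instead of the real environment
print(get_api_key({"OPENAI_API_KEY": "sk-example"}))
```

Passing the mapping as an argument keeps the helper easy to try without touching the real environment.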

To understand the GPT-3.5 API better, we will use the following code to generate the text summary.

    openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        max_tokens=100,
        temperature=0.7,
        top_p=0.5,
        frequency_penalty=0.5,
        messages=[
            {
                "role": "system",
                "content": "You are a helpful assistant for text summarization.",
            },
            {
                "role": "user",
                "content": f"Summarize this for a {person_type}: {prompt}",
            },
        ],
    )

The above code is how we interact with the OpenAI GPT-3.5 model API. Using the ChatCompletion API, we create a conversation and get the intended result after passing the prompt.

Let’s break down each part to understand it better. In the first line, we use openai.ChatCompletion.create to create the response from the prompt we pass into the API.

In the next lines, we have the hyperparameters that we use to tune our text tasks. Here is a summary of what each hyperparameter does:

- model: The model we want to use. In this tutorial, we use the current recommended model (gpt-3.5-turbo).
- max_tokens: The upper limit on the number of tokens the model generates. It helps to limit the length of the generated text.
- temperature: The randomness of the model output; a higher temperature means a more diverse and creative result. The OpenAI API accepts values between 0 and 2.
- top_p: Top-p, or nucleus sampling, is a parameter to control the sampling pool from the output distribution. For example, a value of 0.1 means the model only samples from the tokens that make up the top 10% of the probability mass. The value ranges between 0 and 1; higher values mean a more diverse result.
- frequency_penalty: The penalty for repeated tokens in the output. The value ranges from -2 to 2, where positive values discourage the model from repeating tokens while negative values encourage the model to use more repetitive words. 0 means no penalty.
- messages: The parameter where we pass our text prompt to be processed by the model. We pass a list of dictionaries, each with a “role” key (either “system”, “user”, or “assistant”) that helps the model understand the context and structure, and a “content” key that holds the text.
  - The role “system” sets the guidelines for the model’s “assistant” behavior,
  - The role “user” represents the prompt from the person interacting with the model,
  - The role “assistant” is the response to the “user” prompt.
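To make the sampling hyperparameters more concrete, here is a toy sketch of how temperature, nucleus sampling, and a frequency penalty can reshape a next-token distribution. It illustrates the general techniques and is not OpenAI’s internal implementation; the function names, the tiny vocabulary, and the scores are all made up for the example.

```python
import math


def apply_frequency_penalty(logits, counts, penalty):
    """Lower each token's score in proportion to how often it already appeared."""
    return {tok: score - penalty * counts.get(tok, 0) for tok, score in logits.items()}


def softmax_with_temperature(logits, temperature):
    """Convert scores to probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("this sketch requires temperature > 0")
    top = max(logits.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp((score - top) / temperature) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def nucleus_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept, cumulative = [], 0.0
    for tok, p in ranked:
        kept.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {tok: p / total for tok, p in kept}  # renormalize the surviving tokens


# Made-up scores for a four-token vocabulary
logits = {"the": 2.0, "a": 1.0, "cat": 0.5, "zebra": -1.0}
probs = softmax_with_temperature(logits, temperature=0.7)
print("top_p=0.5 keeps:", list(nucleus_filter(probs, top_p=0.5)))
print("top_p=0.9 keeps:", list(nucleus_filter(probs, top_p=0.9)))
```

A smaller top_p shrinks the pool of candidate tokens, while a higher temperature spreads probability toward less likely tokens, which is why both are described above as controlling how diverse the result is.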

Having explained the parameters above, we can see that the messages parameter has two dictionary objects. The first dictionary is how we set up the model as a text summarizer. The second is where we pass our text and get the summarization output.

In the second dictionary, you will also see the variables person_type and prompt. The person_type is a variable I use to control the summary style, which I will show in the tutorial, while the prompt is where we pass the text to be summarized.
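The way the two variables end up inside the messages list can be tried in isolation, without calling the API. The sketch below wraps the same two dictionaries in a small function; build_messages is a hypothetical name introduced only for this illustration.

```python
def build_messages(person_type, prompt):
    """Build the two-message conversation used by the summarizer."""
    return [
        {
            # Sets the overall behavior of the assistant
            "role": "system",
            "content": "You are a helpful assistant for text summarization.",
        },
        {
            # Carries the style (person_type) and the text to summarize (prompt)
            "role": "user",
            "content": f"Summarize this for a {person_type}: {prompt}",
        },
    ]


messages = build_messages("Second-Grader", "Gravity bends the path of light.")
for message in messages:
    print(message["role"], "->", message["content"])
```

Printing the messages this way is a quick check that the style and the text land where we expect before any tokens are spent.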

Continuing with the tutorial, place the code below in the summarizer_app.py file, and we will run through how the function works.

    import os

    import openai
    from dotenv import load_dotenv

    # Load OPENAI_API_KEY from the .env file into the environment
    load_dotenv()
    openai.api_key = os.getenv("OPENAI_API_KEY")


    def generate_summarizer(
        max_tokens,
        temperature,
        top_p,
        frequency_penalty,
        prompt,
        person_type,
    ):
        # Pass the caller's hyperparameters through instead of fixed values
        res = openai.ChatCompletion.create(
            model="gpt-3.5-turbo",
            max_tokens=int(max_tokens),  # the API expects an integer token count
            temperature=temperature,
            top_p=top_p,
            frequency_penalty=frequency_penalty,
            messages=[
                {
                    "role": "system",
                    "content": "You are a helpful assistant for text summarization.",
                },
                {
                    "role": "user",
                    "content": f"Summarize this for a {person_type}: {prompt}",
                },
            ],
        )
        # The generated summary is the content of the first returned choice
        return res["choices"][0]["message"]["content"]

The code above is where we create a Python function that accepts the various parameters we discussed previously and returns the text summary output.
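The last line of the function reads the summary out of the response. As a rough sketch of what that lookup does, the example below applies it to a hand-written dictionary that mimics the shape of a chat completion response; extract_summary is a hypothetical helper, the sample response is made up, and the check on finish_reason assumes the API reports “length” when the output was cut off by max_tokens.

```python
def extract_summary(res):
    """Return (summary_text, was_truncated) from a chat-completion-shaped dict."""
    choices = res.get("choices") or []
    if not choices:
        raise ValueError("response contains no choices")
    first = choices[0]
    text = first["message"]["content"].strip()
    # "length" signals that generation stopped because max_tokens was reached
    truncated = first.get("finish_reason") == "length"
    return text, truncated


# Hand-written stand-in for an API response, for illustration only
fake_response = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": " Relativity explains how gravity and motion work. ",
            },
            "finish_reason": "length",
        }
    ]
}

summary, truncated = extract_summary(fake_response)
print(summary)
if truncated:
    print("The summary was cut off; consider raising max_tokens.")
```

Surfacing the truncation flag is one way to tell the user that a low token limit, rather than the model, shortened the summary.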

Try the function above with your own parameters and see the output. Then let’s continue the tutorial to create a simple application with the streamlit package.

Streamlit is an open-source Python package designed for creating machine learning and data science web apps. It’s easy to use and intuitive, so it is recommended for many beginners.

Let’s install the streamlit package before we continue with the tutorial (for example, with pip install streamlit).

After the installation is finished, put the following code into summarizer_app.py.

    import streamlit as st

    # Set the application title
    st.title("GPT-3.5 Text Summarizer")

    # Provide the input area for the text to be summarized
    input_text = st.text_area("Enter the text you want to summarize:", height=200)

    # Initiate three columns so the sections sit side by side
    col1, col2, col3 = st.columns(3)

    # Sliders to control the model hyperparameters
    with col1:
        token = st.slider("Token", min_value=0.0, max_value=200.0, value=50.0, step=1.0)
        temp = st.slider("Temperature", min_value=0.0, max_value=1.0, value=0.0, step=0.01)
        top_p = st.slider("Nucleus Sampling", min_value=0.0, max_value=1.0, value=0.5, step=0.01)
        f_pen = st.slider("Frequency Penalty", min_value=-1.0, max_value=1.0, value=0.0, step=0.01)

    # Selection box to select the summarization style
    with col2:
        choice = st.selectbox(
            "How do you like to be explained?",
            (
                "Second-Grader",
                "Professional Data Scientist",
                "Housewives",
                "Retired",
                "University Student",
            ),
        )

    # Show the current parameters used for the model
    with col3:
        with st.expander("Current Parameter"):
            st.write("Current Token :", token)
            st.write("Current Temperature :", temp)
            st.write("Current Nucleus Sampling :", top_p)
            st.write("Current Frequency Penalty :", f_pen)

    # Button that executes the text summarization
    if st.button("Summarize"):
        st.write(generate_summarizer(token, temp, top_p, f_pen, input_text, choice))

Try running the following command in your command prompt to start the application.

streamlit run summarizer_app.py

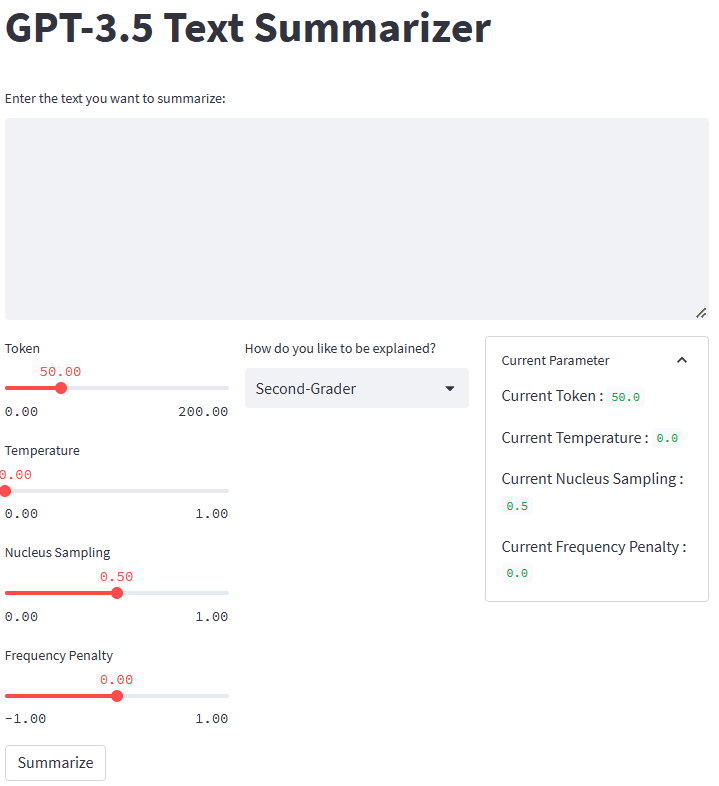

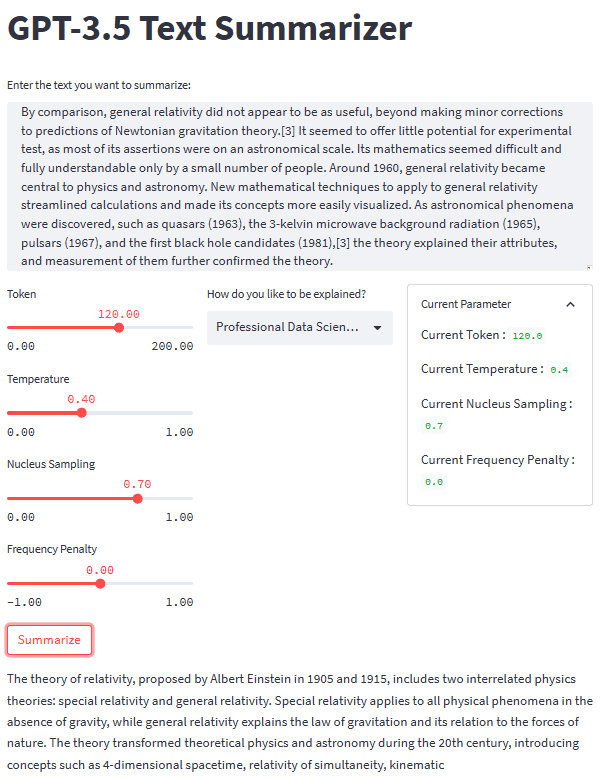

If everything works well, you will see the following application in your default browser.

Image by Author

So, what happened in the code above? Let me briefly explain each function we used:

- st.title: Provides the title text of the web application.
- st.write: Writes the argument into the application; it could be anything, but mainly string text.
- st.text_area: Provides an area for text input that can be stored in a variable and used as the prompt for our text summarizer.
- st.columns: Object containers that provide side-by-side interaction.
- st.slider: Provides a slider widget with set values that the user can interact with. The value is stored in a variable used as the model parameter.
- st.selectbox: Provides a selection widget for users to select the summarization style they want. In the example above, we use five different styles.
- st.expander: Provides a container that users can expand and that holds multiple objects.
- st.button: Provides a button that runs the intended function when the user presses it.

As streamlit automatically lays out the UI following the given code from top to bottom, we can focus more on the interaction.
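Since the widget values flow straight into the API call, one option is to normalize them in a single place first. The sketch below is only an illustration: prepare_params is a hypothetical helper, the ranges are the ones described for each hyperparameter earlier, and the minimum of one token is an assumption of this example.

```python
def prepare_params(token, temp, top_p, f_pen):
    """Convert widget values into keyword arguments with types and ranges checked."""

    def check(name, value, low, high):
        if not low <= value <= high:
            raise ValueError(f"{name} must be between {low} and {high}, got {value}")
        return value

    max_tokens = int(token)  # a slider with float bounds returns a float
    if max_tokens < 1:  # assumption of this sketch: at least one token is requested
        raise ValueError("max_tokens must be at least 1")

    return {
        "max_tokens": max_tokens,
        "temperature": check("temperature", temp, 0.0, 2.0),
        "top_p": check("top_p", top_p, 0.0, 1.0),
        "frequency_penalty": check("frequency_penalty", f_pen, -2.0, 2.0),
    }


# Values matching the slider defaults used in the application
print(prepare_params(50.0, 0.0, 0.5, 0.0))
```

A dictionary like this could then be unpacked into the summarizer call, keeping the validation separate from the UI code.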

With all the pieces in place, let’s try our summarization application with a text example. For our example, I will use the Theory of Relativity Wikipedia page text to be summarized. With the default parameters and the Second-Grader style, we obtain the following result.

Albert Einstein was a very smart scientist who came up with two important ideas about how the world works. The first one, called special relativity, talks about how things move when there is no gravity. The second, called general relativity, explains how gravity works and how it affects things in space like stars and planets. These ideas helped us understand many things in science, like how particles interact with each other and even helped us discover black holes!

You might obtain a different result than the one above. Let’s try the Housewives style and tweak the parameters a bit (Token 100, Temperature 0.5, Nucleus Sampling 0.5, Frequency Penalty 0.3).

The theory of relativity is a set of physics theories proposed by Albert Einstein in 1905 and 1915. It includes special relativity, which applies to physical phenomena without gravity, and general relativity, which explains the law of gravitation and its relation to the forces of nature. The theory transformed theoretical physics and astronomy in the 20th century, introducing concepts like four-dimensional spacetime and predicting astronomical phenomena like black holes and gravitational waves.

As we can see, there is a difference in style for the same text we provide. By changing the prompt and the parameters, our application can be more functional.

The overall look of our text summarizer application can be seen in the image below.

Image by Author

That’s the tutorial on developing a text summarizer application with GPT-3.5. You could tweak the application even further and deploy it.

Generative AI is on the rise, and we should take advantage of the opportunity by creating fantastic applications. In this tutorial, we learned how the GPT-3.5 OpenAI APIs work and how to use them to create a text summarizer application with the help of Python and the streamlit package.

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media.
