[ad_1]

(cybermagician/Shutterstock)

A grand competitors of numerical illustration is shaping up as some firms promote floating level information sorts in deep studying, whereas others champion integer information sorts.

Synthetic Intelligence Is Rising In Recognition And Price

Synthetic intelligence (AI) is proliferating into each nook of our lives. The demand for services powered by AI algorithms has skyrocketed alongside the recognition of enormous language fashions (LLMs) like ChatGPT, and picture era fashions like Steady Diffusion. With this improve in reputation, nonetheless, comes a rise in scrutiny over the computational and environmental prices of AI, and notably the subfield of deep studying.

The first elements influencing the prices of deep studying are the scale and construction of the deep studying mannequin, the processor it’s operating on, and the numerical illustration of the info. State-of-the-art fashions have been rising in measurement for years now, with the compute necessities doubling each 6-10 months [1] for the final decade. Processor compute energy has elevated as nicely, however not practically quick sufficient to maintain up with the rising prices of the most recent AI fashions. This has led researchers to delve deeper into numerical illustration in makes an attempt to cut back the price of AI. Selecting the best numerical illustration, or information sort, has unimaginable implications on the ability consumption, accuracy, and throughput of a given mannequin. There may be, nonetheless, no singular reply to which information sort is finest for AI. Knowledge sort necessities fluctuate between the 2 distinct phases of deep studying: the preliminary coaching section and the following inference section.

Discovering the Candy Spot: Bit by Bit

In terms of growing AI effectivity, the strategy of first resort is quantization of the info sort. Quantization reduces the variety of bits required to symbolize the weights of a community. Decreasing the variety of bits not solely makes the mannequin smaller, however reduces the entire computation time, and thus reduces the ability required to do the computations. That is an important method for these pursuing environment friendly AI.

AI fashions are sometimes educated utilizing single precision 32-bit floating level (FP32) information sorts. It was discovered, nonetheless, that each one 32 bits aren’t at all times wanted to take care of accuracy. Makes an attempt at coaching fashions utilizing half precision 16-bit floating level (FP16) information sorts confirmed early success, and the race to search out the minimal variety of bits that maintains accuracy was on. Google got here out with their 16-bit mind float (BF16), and fashions being primed for inference have been typically quantized to 8-bit floating level (FP8) and integer (INT8) information sorts. There are two major approaches to quantizing a neural community: Submit-Coaching Quantization (PTQ) and Quantization-Conscious Coaching (QAT). Each strategies purpose to cut back the numerical precision of the mannequin to enhance computational effectivity, reminiscence footprint, and vitality consumption, however they differ in how and when the quantization is utilized, and the ensuing accuracy.

Submit-Coaching Quantization (PTQ) happens after coaching a mannequin with higher-precision representations (e.g., FP32 or FP16). It converts the mannequin’s weights and activations to lower-precision codecs (e.g., FP8 or INT8). Though easy to implement, PTQ may end up in important accuracy loss, notably in low-precision codecs, because the mannequin is not educated to deal with quantization errors. Quantization-Conscious Coaching (QAT) incorporates quantization throughout coaching, permitting the mannequin to adapt to diminished numerical precision. Ahead and backward passes simulate quantized operations, computing gradients regarding quantized weights and activations. Though QAT usually yields higher mannequin accuracy than PTQ, it requires coaching course of modifications and may be extra advanced to implement.

The 8-bit Debate

The 8-bit Debate

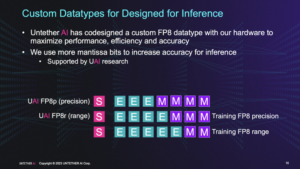

The AI trade has begun coalescing round two most popular candidates for quantized information sorts: INT8 and FP8. Each {hardware} vendor appears to have taken a facet. In mid 2022, a paper by Graphcore and AMD[2] floated the thought of an IEEE normal FP8 datatype. A subsequent joint paper with the same proposal from Intel, Nvidia, and Arm[3] adopted shortly. Different AI {hardware} distributors like Qualcomm[4, 5] and Untether AI[6] additionally wrote papers selling FP8 and reviewing its deserves versus INT8. However the debate is way from settled. Whereas there isn’t a singular reply for which information sort is finest for AI on the whole, there are superior and inferior information sorts in the case of varied AI processors and mannequin architectures with particular efficiency and accuracy necessities.

Integer Versus Floating Level

Floating level and integer information sorts are two methods to symbolize and retailer numerical values in pc reminiscence. There are just a few key variations between the 2 codecs that translate to benefits and drawbacks for varied neural networks in coaching and inference.

The variations all stem from their illustration. Floating level information sorts are used to symbolize actual numbers, which embody each integers and fractions. These numbers may be represented in scientific notation, with a base (mantissa) and an exponent.

Alternatively, integer information sorts are used to symbolize complete numbers (with out fractions). The representations lead to a really massive distinction in precision and dynamic vary. Floating level numbers have a wider dynamic vary then their integer counterparts. Integer numbers have a smaller vary and might solely symbolize complete numbers with a set degree of precision.

Integer vs Floating Level for Coaching

In deep studying, the numerical illustration necessities differ between the coaching and inference phases because of the distinctive computational calls for and priorities of every stage. Through the coaching section, the first focus is on updating the mannequin’s parameters via iterative optimization, which usually necessitates larger dynamic vary to make sure the correct propagation of gradients and the convergence of the training course of. Consequently, floating-point representations, equivalent to FP32, FP16, and even FP8 recently, must be employed throughout coaching to take care of ample dynamic vary. Alternatively, the inference section is worried with the environment friendly analysis of the educated mannequin on new enter information, the place the precedence shifts in the direction of minimizing computational complexity, reminiscence footprint, and vitality consumption. On this context, lower-precision numerical representations, equivalent to 8-bit integer (INT8) grow to be an possibility along with FP8. The final word choice is determined by the precise mannequin and underlying {hardware}.

Integer vs Floating Level for Inference

The perfect information sort for inference will fluctuate relying on the applying and the goal {hardware}. Actual-time and cellular inference companies have a tendency to make use of the smaller 8-bit information sorts to cut back reminiscence footprint, compute time, and vitality consumption whereas sustaining sufficient accuracy.

FP8 is rising more and more standard, as each main {hardware} vendor and cloud service supplier has addressed its use in deep studying. There are three major flavors of FP8, outlined by the ratio of exponents to mantissa. Having extra exponents will increase the dynamic vary of a knowledge sort, so FP8 E3M4 consisting of 1 signal bit, 3 exponent bits, and 4 mantissa bits, has the smallest dynamic vary of the bunch. This FP8 illustration sacrifices vary for precision by having extra bits reserved for mantissa, which will increase the accuracy. FP8 E4M3 has an additional exponent, and thus a larger vary. FP8 E5M2 has the best dynamic vary of the trio, making it the popular goal for coaching, which requires larger dynamic vary. Having a group of FP8 representations permits for a tradeoff between dynamic vary and precision, as some inference functions would profit from the elevated accuracy supplied by an additional mantissa bit.

INT8, however, successfully has 1 signal bit, 1 exponent bit, and 6 mantissa bits. This sacrifices a lot of its dynamic vary for precision. Whether or not or not this interprets into higher accuracy in comparison with FP8 is determined by the AI mannequin in query. And whether or not or not it interprets into higher energy effectivity will rely upon the underlying {hardware}. Analysis from Untether AI analysis[6] reveals that FP8 outperforms INT8 when it comes to accuracy, and for his or her {hardware}, efficiency and effectivity as nicely. Alternatively, Qualcomm analysis [5] had discovered that the accuracy good points of FP8 are usually not definitely worth the lack of effectivity in comparison with INT8 of their {hardware}. In the end, the choice for which information sort to pick out when quantizing for inference will typically come down to what’s finest supported in {hardware}, in addition to relying on the mannequin itself.

References

[1] Compute Tendencies Throughout Three Eras Of Machine Studying, https://arxiv.org/pdf/2202.05924.pdf

[2] 8-bit Numerical Codecs for Deep Neural Networks, https://arxiv.org/abs/2206.02915

[3] FP8 Codecs for Deep Studying, https://arxiv.org/abs/2209.05433

[4] FP8 Quantization: The Energy of the Exponent, https://arxiv.org/pdf/2208.09225.pdf

[5] FP8 verses INT8 for Environment friendly Deep Studying Inference, https://arxiv.org/abs/2303.17951

[6] FP8: Environment friendly AI Inference Utilizing Customized 8-bit Floating Level Knowledge Varieties, https://www.untether.ai/content-request-form-fp8-whitepaper

Waleed Atallah is a Product Supervisor answerable for silicon, boards, and programs at Untether AI. At the moment, he’s rolling out Untether AI’s second era silicon product, the speedAI household of gadgets. He was beforehand a Product Supervisor at Intel, the place he was answerable for high-end FPGAs with excessive bandwidth reminiscence. His pursuits span all issues compute effectivity, notably the mapping of software program to new {hardware} architectures. He obtained a B.S. diploma in Electrical Engineering from UCLA.

Associated

[ad_2]

Source link