[ad_1]

Photograph by Matheus Bertelli

This mild introduction to the machine studying fashions that energy ChatGPT, will begin on the introduction of Giant Language Fashions, dive into the revolutionary self-attention mechanism that enabled GPT-3 to be educated, after which burrow into Reinforcement Studying From Human Suggestions, the novel method that made ChatGPT distinctive.

ChatGPT is an extrapolation of a category of machine studying Pure Language Processing fashions often called Giant Language Mannequin (LLMs). LLMs digest enormous portions of textual content information and infer relationships between phrases inside the textual content. These fashions have grown over the previous few years as we’ve seen developments in computational energy. LLMs improve their functionality as the dimensions of their enter datasets and parameter house improve.

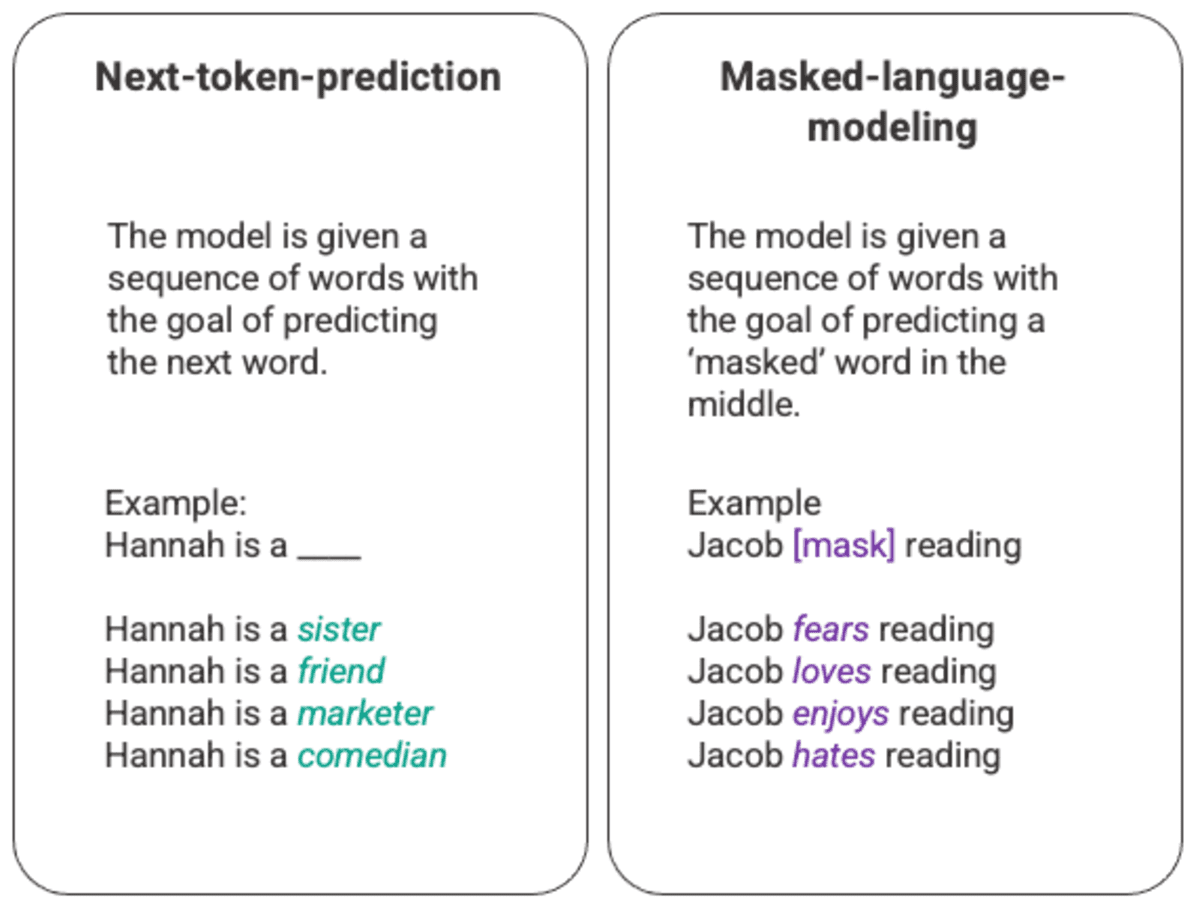

Probably the most fundamental coaching of language fashions includes predicting a phrase in a sequence of phrases. Mostly, that is noticed as both next-token-prediction and masked-language-modeling.

Arbitrary instance of next-token-prediction and masked-language-modeling generated by the creator.

On this fundamental sequencing method, usually deployed by means of a Lengthy-Quick-Time period-Reminiscence (LSTM) mannequin, the mannequin is filling within the clean with probably the most statistically possible phrase given the encircling context. There are two main limitations with this sequential modeling construction.

- The mannequin is unable to worth a few of the surrounding phrases greater than others. Within the above instance, whereas ‘studying’ might most frequently affiliate with ‘hates’, within the database ‘Jacob’ could also be such an avid reader that the mannequin ought to give extra weight to ‘Jacob’ than to ‘studying’ and select ‘love’ as a substitute of ‘hates’.

- The enter information is processed individually and sequentially fairly than as a complete corpus. Which means when an LSTM is educated, the window of context is mounted, extending solely past a person enter for a number of steps within the sequence. This limits the complexity of the relationships between phrases and the meanings that may be derived.

In response to this problem, in 2017 a group at Google Mind launched transformers. Not like LSTMs, transformers can course of all enter information concurrently. Utilizing a self-attention mechanism, the mannequin may give various weight to completely different components of the enter information in relation to any place of the language sequence. This function enabled huge enhancements in infusing which means into LLMs and permits processing of considerably bigger datasets.

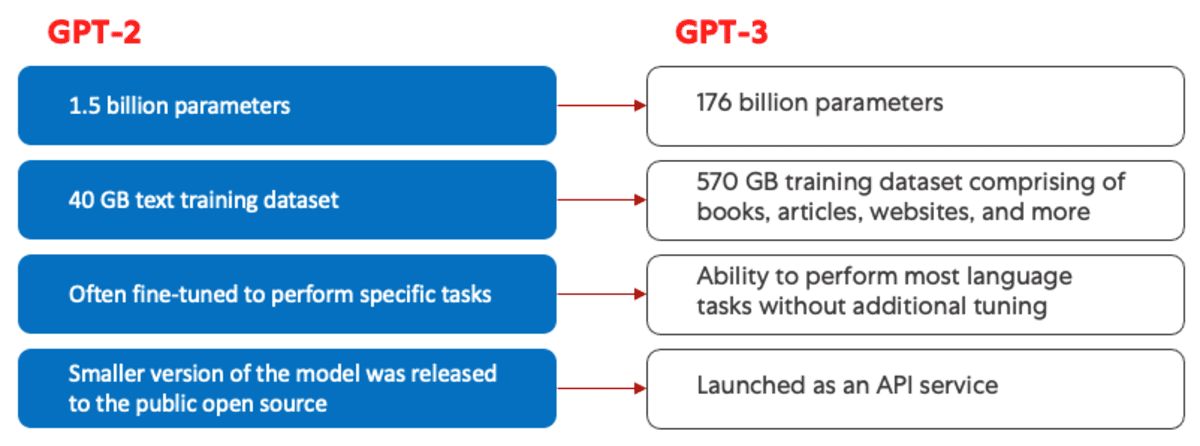

Generative Pre-training Transformer (GPT) fashions had been first launched in 2018 by openAI as GPT-1. The fashions continued to evolve over 2019 with GPT-2, 2020 with GPT-3, and most lately in 2022 with InstructGPT and ChatGPT. Previous to integrating human suggestions into the system, the best development within the GPT mannequin evolution was pushed by achievements in computational effectivity, which enabled GPT-3 to be educated on considerably extra information than GPT-2, giving it a extra various information base and the potential to carry out a wider vary of duties.

Comparability of GPT-2 (left) and GPT-3 (proper). Generated by the creator.

All GPT fashions leverage the transformer structure, which implies they’ve an encoder to course of the enter sequence and a decoder to generate the output sequence. Each the encoder and decoder have a multi-head self-attention mechanism that enables the mannequin to differentially weight components of the sequence to deduce which means and context. As well as, the encoder leverages masked-language-modeling to grasp the connection between phrases and produce extra understandable responses.

The self-attention mechanism that drives GPT works by changing tokens (items of textual content, which is usually a phrase, sentence, or different grouping of textual content) into vectors that symbolize the significance of the token within the enter sequence. To do that, the mannequin,

- Creates a question, key, and worth vector for every token within the enter sequence.

- Calculates the similarity between the question vector from the first step and the important thing vector of each different token by taking the dot product of the 2 vectors.

- Generates normalized weights by feeding the output of step 2 right into a softmax function.

- Generates a last vector, representing the significance of the token inside the sequence by multiplying the weights generated in step 3 by the worth vectors of every token.

The ‘multi-head’ consideration mechanism that GPT makes use of is an evolution of self-attention. Quite than performing steps 1–4 as soon as, in parallel the mannequin iterates this mechanism a number of occasions, every time producing a brand new linear projection of the question, key, and worth vectors. By increasing self-attention on this manner, the mannequin is able to greedy sub-meanings and extra complicated relationships inside the enter information.

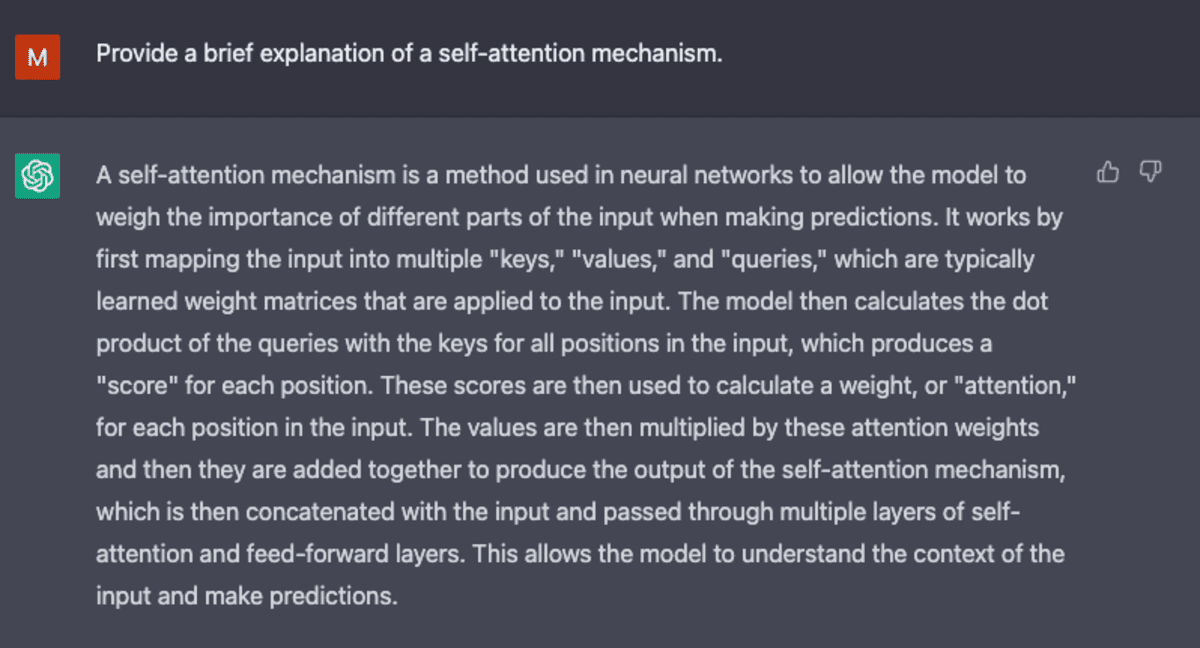

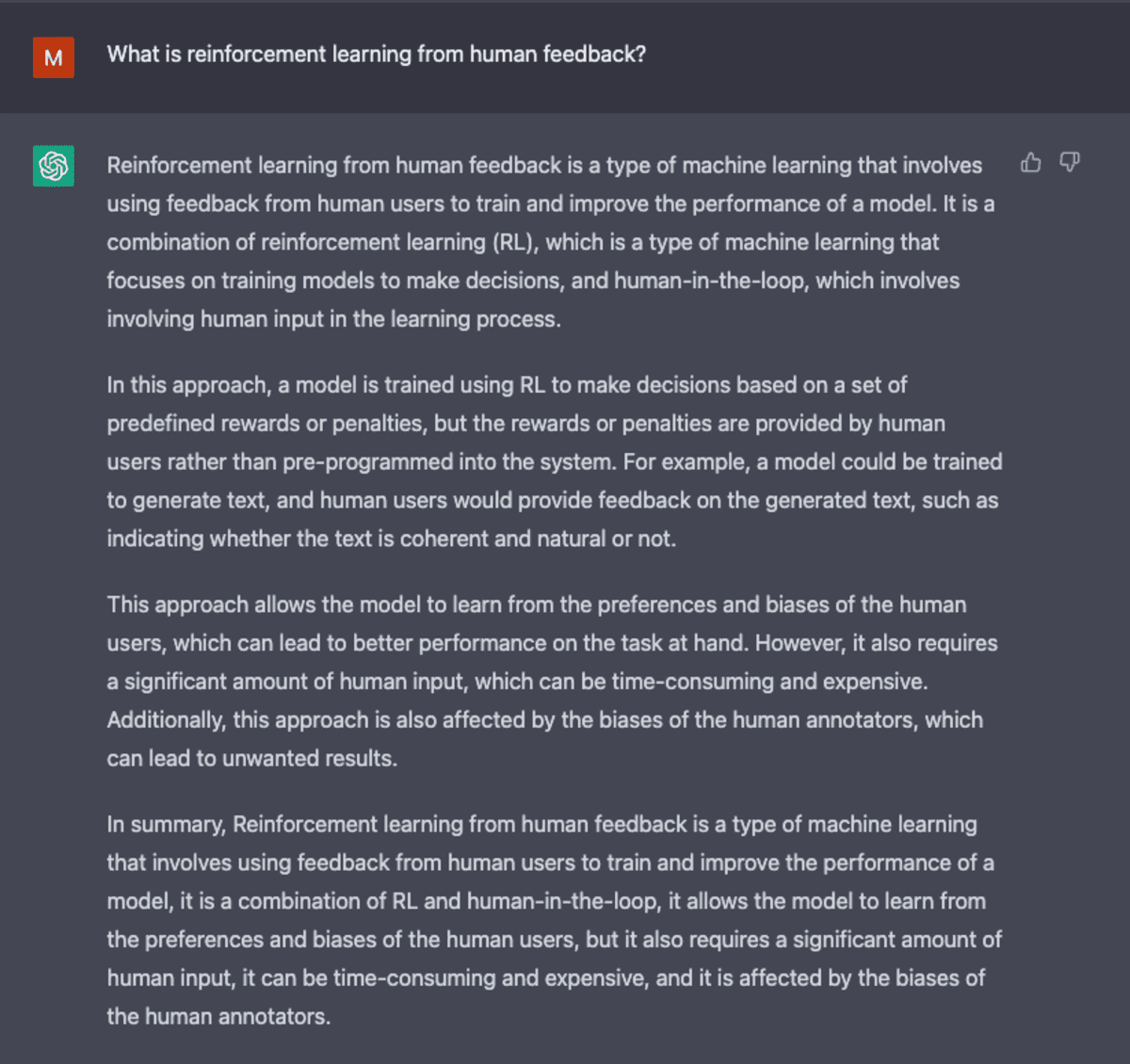

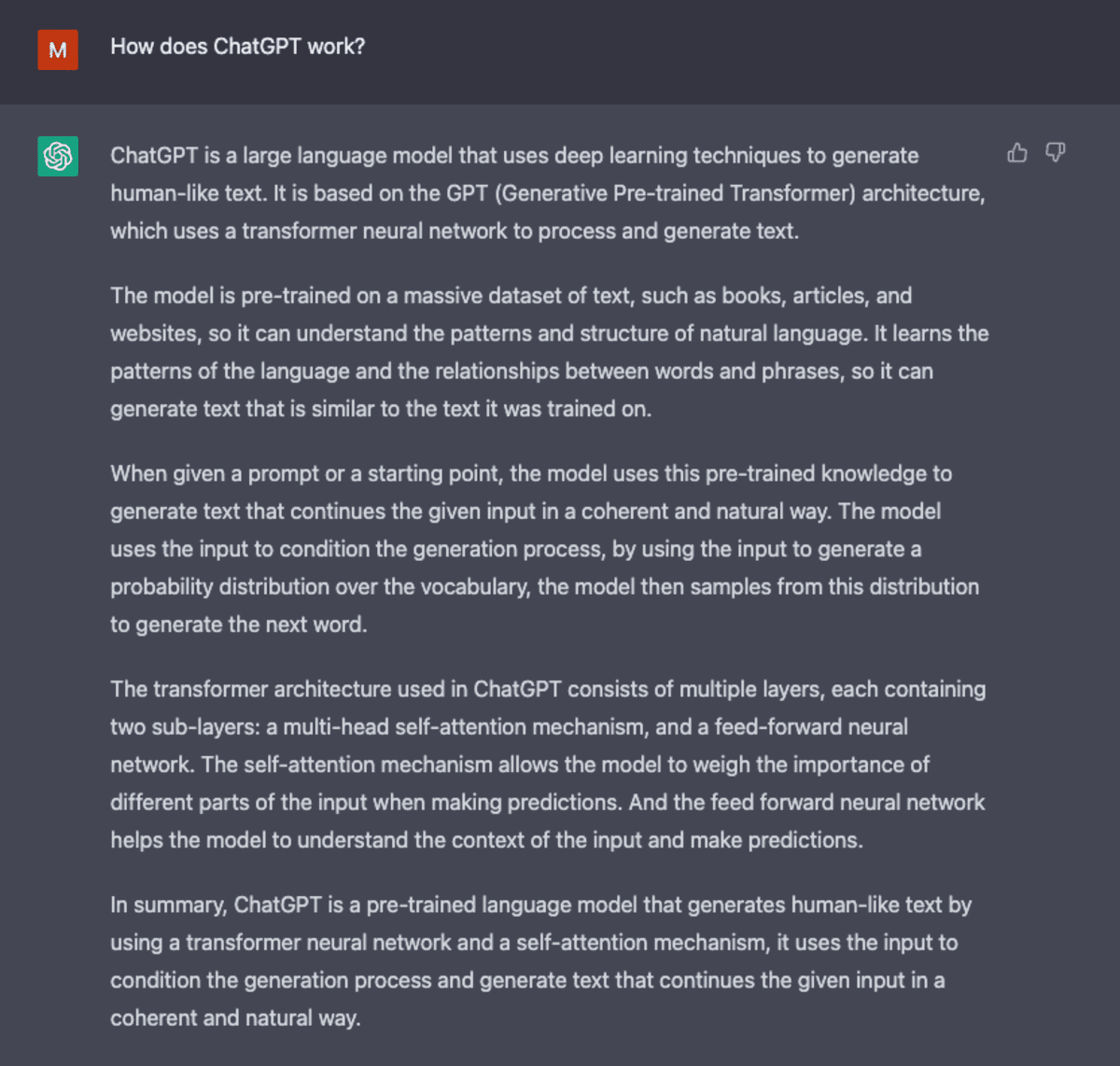

Screenshot from ChatGPT generated by the creator.

Though GPT-3 launched exceptional developments in pure language processing, it’s restricted in its skill to align with person intentions. For instance, GPT-3 might produce outputs that

- Lack of helpfulness which means they do not observe the person’s specific directions.

- Comprise hallucinations that replicate non-existing or incorrect information.

- Lack interpretability making it tough for people to grasp how the mannequin arrived at a specific choice or prediction.

- Embrace poisonous or biased content material that’s dangerous or offensive and spreads misinformation.

Progressive coaching methodologies had been launched in ChatGPT to counteract a few of these inherent points of ordinary LLMs.

ChatGPT is a derivative of InstructGPT, which launched a novel strategy to incorporating human suggestions into the coaching course of to raised align the mannequin outputs with person intent. Reinforcement Studying from Human Suggestions (RLHF) is described in depth in openAI’s 2022 paper Coaching language fashions to observe directions with human suggestions and is simplified beneath.

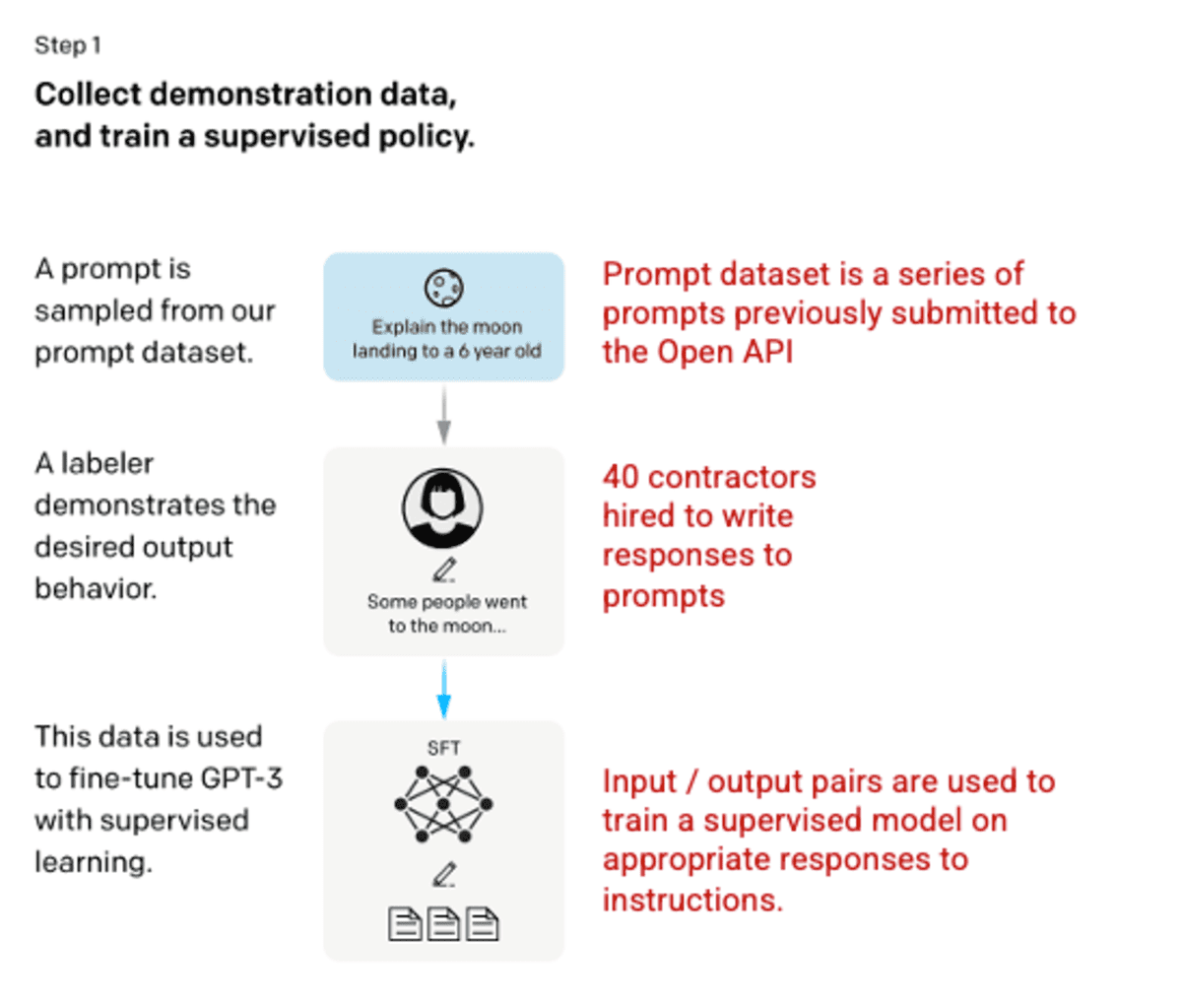

Step 1: Supervised Tremendous Tuning (SFT) Mannequin

The primary improvement concerned fine-tuning the GPT-3 mannequin by hiring 40 contractors to create a supervised coaching dataset, by which the enter has a recognized output for the mannequin to be taught from. Inputs, or prompts, had been collected from precise person entries into the Open API. The labelers then wrote an acceptable response to the immediate thus making a recognized output for every enter. The GPT-3 mannequin was then fine-tuned utilizing this new, supervised dataset, to create GPT-3.5, additionally referred to as the SFT mannequin.

With a purpose to maximize range within the prompts dataset, solely 200 prompts might come from any given person ID and any prompts that shared lengthy widespread prefixes had been eliminated. Lastly, all prompts containing personally identifiable data (PII) had been eliminated.

After aggregating prompts from OpenAI API, labelers had been additionally requested to create pattern prompts to fill-out classes by which there was solely minimal actual pattern information. The classes of curiosity included

- Plain prompts: any arbitrary ask.

- Few-shot prompts: directions that include a number of question/response pairs.

- Person-based prompts: correspond to a particular use-case that was requested for the OpenAI API.

When producing responses, labelers had been requested to do their finest to deduce what the instruction from the person was. The paper describes the primary three ways in which prompts request data.

- Direct: “Inform me about…”

- Few-shot: Given these two examples of a narrative, write one other story about the identical subject.

- Continuation: Given the beginning of a narrative, end it.

The compilation of prompts from the OpenAI API and hand-written by labelers resulted in 13,000 enter / output samples to leverage for the supervised mannequin.

Picture (left) inserted from Coaching language fashions to observe directions with human suggestions OpenAI et al., 2022 https://arxiv.org/pdf/2203.02155.pdf. Extra context added in crimson (proper) by the creator.

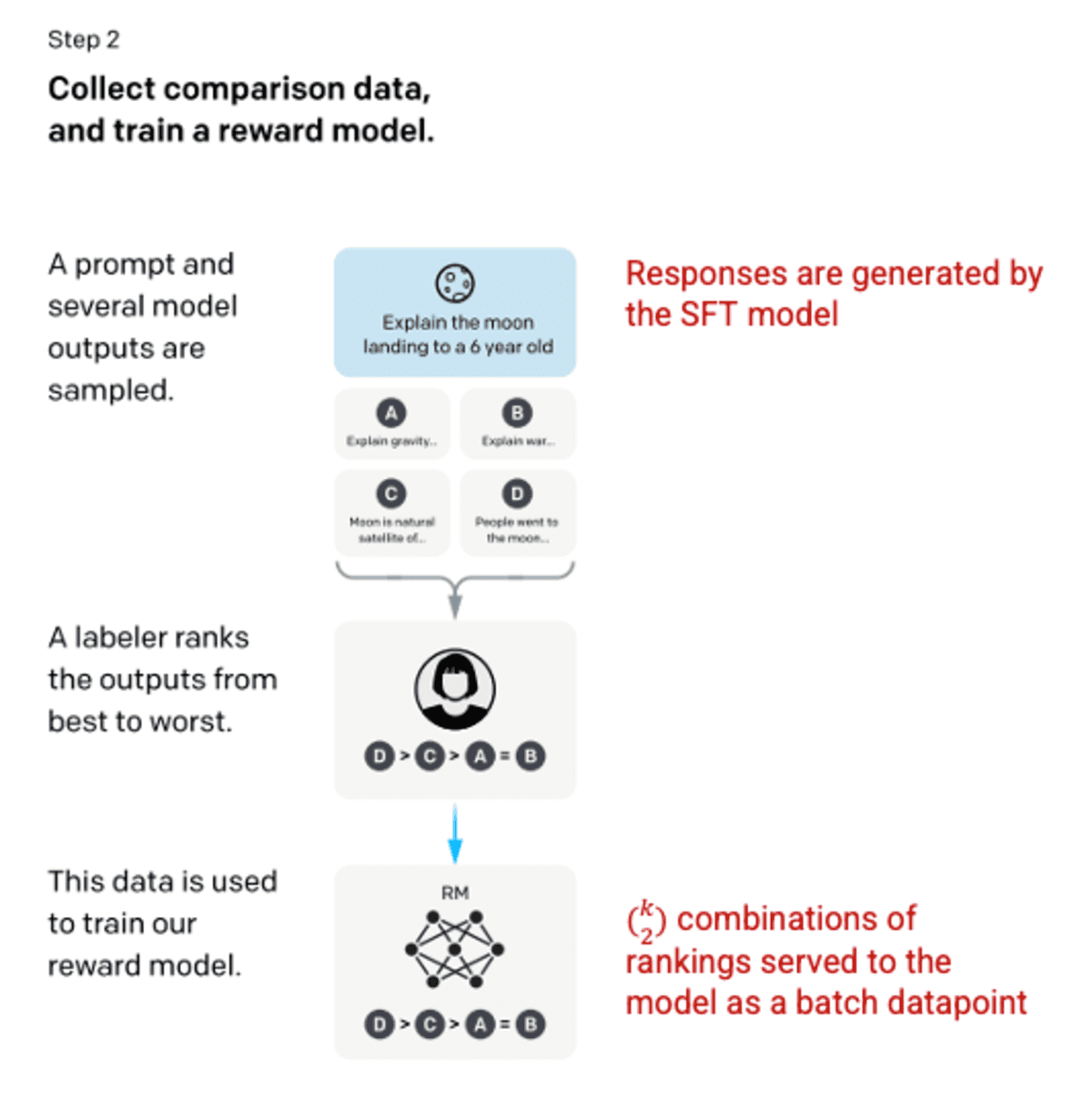

Step 2: Reward Mannequin

After the SFT mannequin is educated in step 1, the mannequin generates higher aligned responses to person prompts. The following refinement comes within the type of coaching a reward mannequin by which a mannequin enter is a collection of prompts and responses, and the output is a scaler worth, referred to as a reward. The reward mannequin is required with a view to leverage Reinforcement Studying by which a mannequin learns to supply outputs to maximise its reward (see step 3).

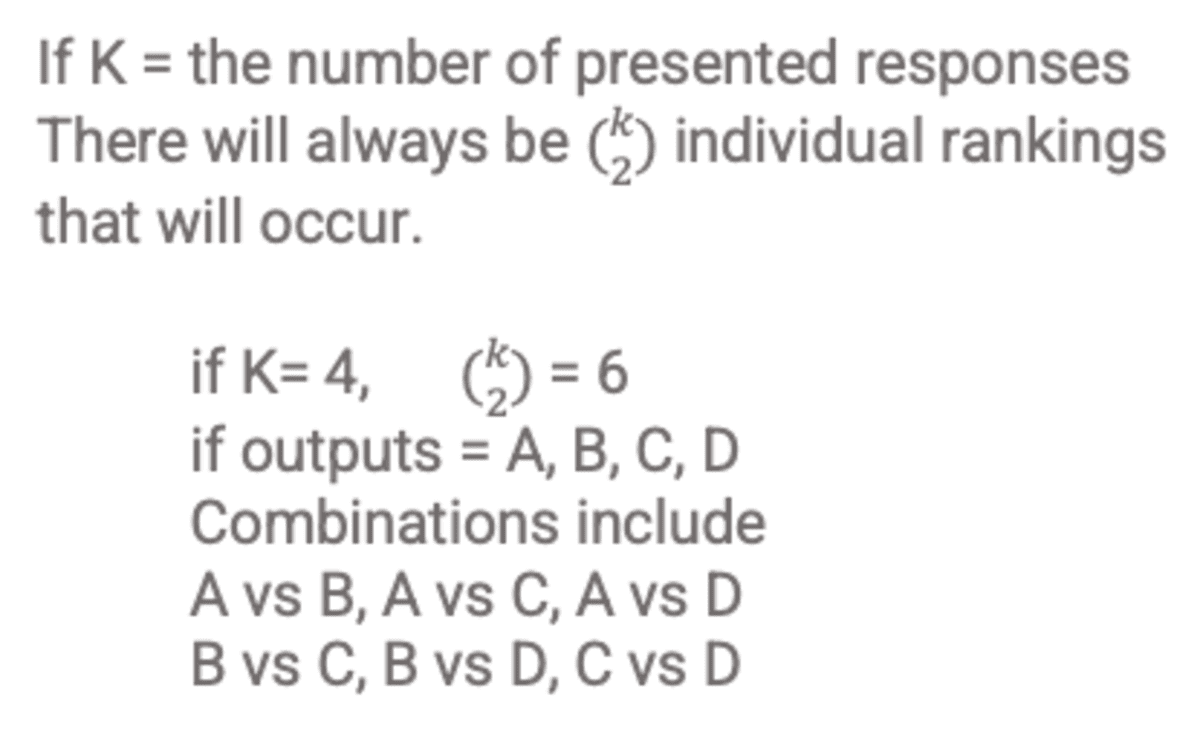

To coach the reward mannequin, labelers are offered with 4 to 9 SFT mannequin outputs for a single enter immediate. They’re requested to rank these outputs from finest to worst, creating mixtures of output rating as follows.

Instance of response rating mixtures. Generated by the creator.

Together with every mixture within the mannequin as a separate datapoint led to overfitting (failure to extrapolate past seen information). To unravel, the mannequin was constructed leveraging every group of rankings as a single batch datapoint.

Picture (left) inserted from Coaching language fashions to observe directions with human suggestions OpenAI et al., 2022 https://arxiv.org/pdf/2203.02155.pdf. Extra context added in crimson (proper) by the creator.

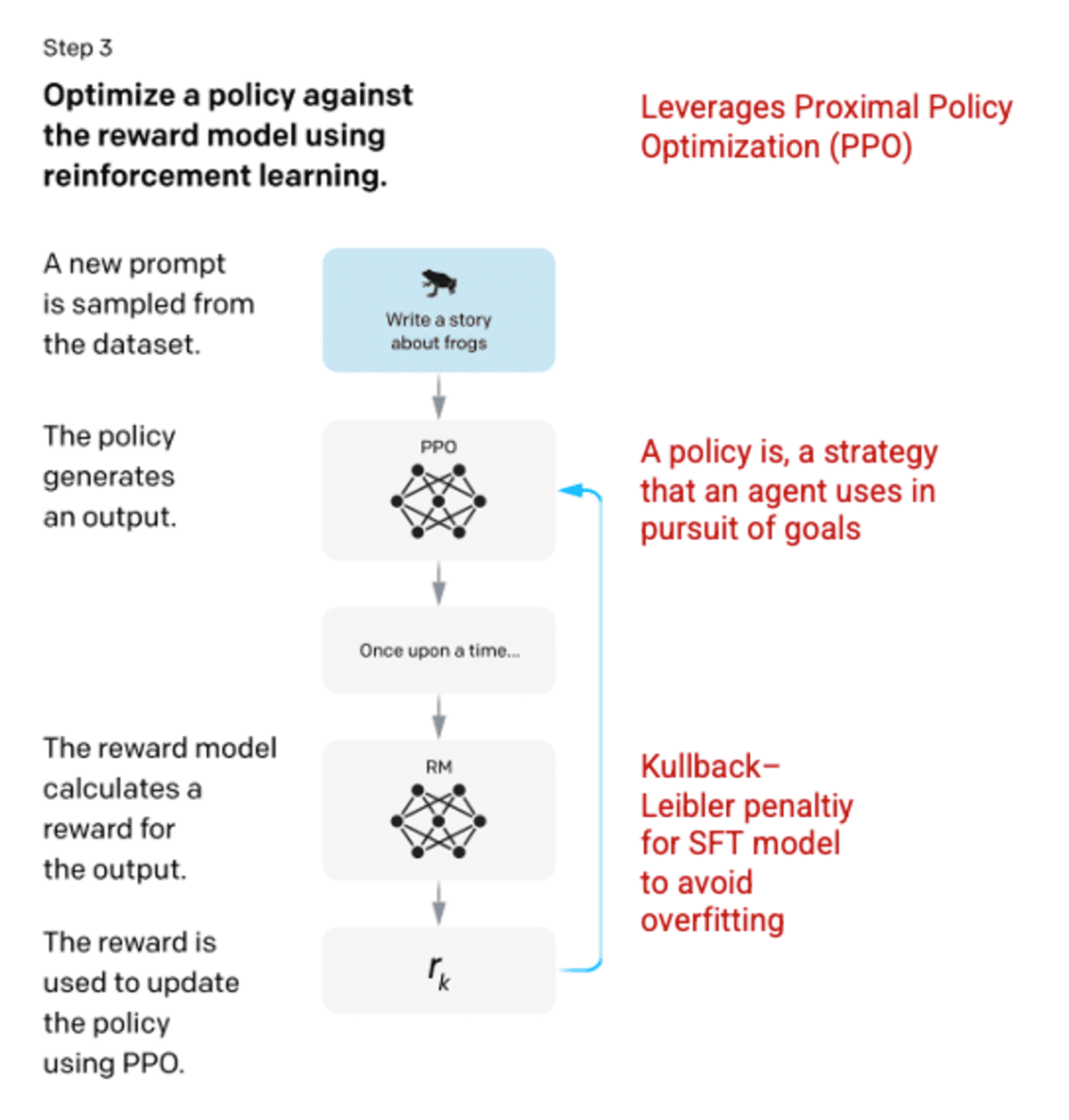

Step 3: Reinforcement Studying Mannequin

Within the last stage, the mannequin is offered with a random immediate and returns a response. The response is generated utilizing the ‘coverage’ that the mannequin has discovered in step 2. The coverage represents a method that the machine has discovered to make use of to attain its aim; on this case, maximizing its reward. Primarily based on the reward mannequin developed in step 2, a scaler reward worth is then decided for the immediate and response pair. The reward then feeds again into the mannequin to evolve the coverage.

In 2017, Schulman et al. launched Proximal Policy Optimization (PPO), the methodology that’s utilized in updating the mannequin’s coverage as every response is generated. PPO incorporates a per-token Kullback–Leibler (KL) penalty from the SFT mannequin. The KL divergence measures the similarity of two distribution features and penalizes excessive distances. On this case, utilizing a KL penalty reduces the space that the responses may be from the SFT mannequin outputs educated in step 1 to keep away from over-optimizing the reward mannequin and deviating too drastically from the human intention dataset.

Picture (left) inserted from Coaching language fashions to observe directions with human suggestions OpenAI et al., 2022 https://arxiv.org/pdf/2203.02155.pdf. Extra context added in crimson (proper) by the creator.

Steps 2 and three of the method may be iterated by means of repeatedly although in apply this has not been performed extensively.

Screenshot from ChatGPT generated by the creator.

Analysis of the Mannequin

Analysis of the mannequin is carried out by setting apart a take a look at set throughout coaching that the mannequin has not seen. On the take a look at set, a collection of evaluations are carried out to find out if the mannequin is best aligned than its predecessor, GPT-3.

Helpfulness: the mannequin’s skill to deduce and observe person directions. Labelers most popular outputs from InstructGPT over GPT-3 85 ± 3% of the time.

Truthfulness: the mannequin’s tendency for hallucinations. The PPO mannequin produced outputs that confirmed minor will increase in truthfulness and informativeness when assessed utilizing the TruthfulQA dataset.

Harmlessness: the mannequin’s skill to keep away from inappropriate, derogatory, and denigrating content material. Harmlessness was examined utilizing the RealToxicityPrompts dataset. The take a look at was carried out below three circumstances.

- Instructed to offer respectful responses: resulted in a big lower in poisonous responses.

- Instructed to offer responses, with none setting for respectfulness: no vital change in toxicity.

- Instructed to offer poisonous response: responses had been actually considerably extra poisonous than the GPT-3 mannequin.

For extra data on the methodologies utilized in creating ChatGPT and InstructGPT, learn the unique paper revealed by OpenAI Coaching language fashions to observe directions with human suggestions, 2022 https://arxiv.org/pdf/2203.02155.pdf.

Screenshot from ChatGPT generated by the creator.

Comfortable studying!

- https://openai.com/blog/chatgpt/

- https://arxiv.org/pdf/2203.02155.pdf

- https://medium.com/r/?url=https%3A%2F%2Fdeepai.org%2Fmachine-learning-glossary-and-terms%2Fsoftmax-layer

- https://www.assemblyai.com/blog/how-chatgpt-actually-works/

- https://medium.com/r/?url=https%3A%2F%2Ftowardsdatascience.com%2Fproximal-policy-optimization-ppo-explained-abed1952457b

Molly Ruby is a Knowledge Scientist at Mars and a content material author.

Original. Reposted with permission.

[ad_2]

Source link