
Source: Adobe Stock

Do you remember the first time you started building SQL queries to analyse your data? I'm sure that most of the time you just wanted to see the "Top selling products" or the "Count of product visits by week". Why write SQL queries instead of simply asking what you have in mind in natural language?

This is now possible thanks to recent advances in NLP. You can not only use an LLM (Large Language Model) but also teach it new skills. This is called transfer learning: you use a pretrained model as a starting point, and even with a small labeled dataset you can get much better performance than you would by training on that data alone.

In this tutorial we are going to apply transfer learning to Google's text-to-text generation model T5, fine-tuning it on our custom data so that it can convert basic questions into SQL queries. We will add a new task to T5 called: translate English to SQL. By the end of this article you will have a trained model that can translate the following sample query:

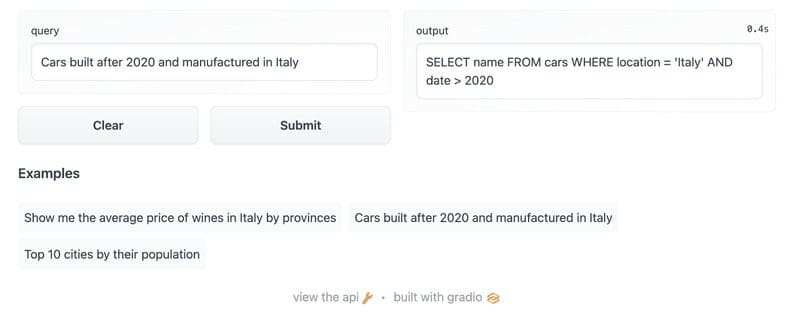

Cars built after 2020 and manufactured in Italy

into the following SQL query:

SELECT name FROM cars WHERE location = 'Italy' AND date > 2020

You can find the Gradio demo here and the Layer project here.

Unlike common language-to-language translation datasets, an English-to-SQL dataset can be built programmatically: we generate the translation pairs from templates. So the first step is to come up with some templates.

Next, we build a function that uses these templates to generate our dataset.
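As a minimal sketch of that approach (the templates, slot values, and the `generate_pairs` name below are hypothetical, not the article's own), each template pairs an English pattern with its SQL counterpart, and the generator fills both sides from the same slot values so every pair stays consistent:

```python
import itertools
import random

# Hypothetical templates: each pair is (English pattern, SQL pattern).
# The same {placeholders} are filled on both sides of a pair.
TEMPLATES = [
    ("{noun} built after {year}",
     "SELECT name FROM {table} WHERE date > {year}"),
    ("{noun} manufactured in {country}",
     "SELECT name FROM {table} WHERE location = '{country}'"),
    ("{noun} built after {year} and manufactured in {country}",
     "SELECT name FROM {table} WHERE location = '{country}' AND date > {year}"),
]

# Illustrative slot values; a real dataset would use many more.
TABLES = {"Cars": "cars", "Bikes": "bikes"}  # English noun -> SQL table name
YEARS = [2018, 2019, 2020]
COUNTRIES = ["Italy", "Germany", "Japan"]


def generate_pairs(templates=TEMPLATES, seed=42):
    """Fill every template with every combination of slot values.

    Returns a shuffled list of {"english": ..., "sql": ...} dicts with
    duplicates removed (templates that ignore a slot would otherwise repeat).
    """
    seen = {}
    for english, sql in templates:
        for (noun, table), year, country in itertools.product(
            TABLES.items(), YEARS, COUNTRIES
        ):
            slots = {"noun": noun, "table": table, "year": year, "country": country}
            # str.format ignores keyword arguments a template does not use.
            pair = (english.format(**slots), sql.format(**slots))
            seen[pair] = None  # dict keys keep insertion order and drop repeats
    rows = [{"english": e, "sql": s} for e, s in seen]
    random.Random(seed).shuffle(rows)  # seeded shuffle for reproducibility
    return rows


if __name__ == "__main__":
    for row in generate_pairs()[:3]:
        print(row["english"], "->", row["sql"])
```

With these values the first template yields 6 pairs, the second 6, and the third 18, so the sketch produces 30 unique pairs; adding slot values or templates grows the dataset combinatorially.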

As you can see, we have used Layer's @dataset decorator here. We can now pass this function to Layer simply with:

layer.run([build_dataset])

Once the run is complete, we can start building our custom dataset loader to fine-tune T5.

Our dataset loader is a PyTorch Dataset implementation specific to the custom dataset we just built.
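A map-style dataset for this task could look roughly like the sketch below. It assumes the task prefix `translate English to SQL: ` (following T5's convention of naming the task in the input text) and a pluggable `tokenize` callable; the class and helper names are hypothetical. In a real project the class would subclass `torch.utils.data.Dataset`, and `tokenize` would wrap a T5 tokenizer that also pads, truncates, and returns attention masks.

```python
from typing import Callable, Dict, List, Sequence

# Assumed task prefix; it must match whatever is used at inference time.
TASK_PREFIX = "translate English to SQL: "


class EnglishToSQLDataset:
    """Map-style dataset of (English, SQL) pairs for T5 fine-tuning.

    Sketch only: __len__ and __getitem__ are the core of a map-style
    dataset, which is what torch.utils.data.DataLoader indexes into.
    """

    def __init__(self, rows: Sequence[Dict[str, str]],
                 tokenize: Callable[[str], List[int]]):
        self.rows = list(rows)
        self.tokenize = tokenize

    def __len__(self) -> int:
        return len(self.rows)

    def __getitem__(self, idx: int) -> Dict[str, List[int]]:
        row = self.rows[idx]
        # The prefix tells T5 which task to perform on this input.
        source = TASK_PREFIX + row["english"]
        return {
            "input_ids": self.tokenize(source),
            "labels": self.tokenize(row["sql"]),  # target sequence to learn
        }


def toy_tokenize(text: str) -> List[int]:
    # Stand-in tokenizer for the demo: one id per character (its code point).
    return [ord(ch) for ch in text]


if __name__ == "__main__":
    ds = EnglishToSQLDataset(
        [{"english": "Cars built after 2020",
          "sql": "SELECT name FROM cars WHERE date > 2020"}],
        toy_tokenize,
    )
    print(len(ds), len(ds[0]["input_ids"]))
```

Keeping the tokenizer injectable makes the prefixing and source/target pairing easy to check without downloading a model.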

Our dataset is ready and registered with Layer. Now we are going to develop the fine-tuning logic. Decorate the function with @model and pass it to Layer. This trains the model on Layer's infrastructure and registers it under our project.

Here we use three separate Layer decorators:

- @model: Tells Layer that this function trains an ML model.

- @fabric: Tells Layer the compute resources (CPU, GPU, etc.) needed to train the model. T5 is a big model and we need a GPU to fine-tune it. Here is a list of the available fabrics you can use with Layer.

- @pip_requirements: The Python packages needed to fine-tune our model.

Now we can simply pass the tokenizer and model training functions to Layer to train our model on a remote GPU instance.

layer.run([build_tokenizer, build_model], debug=True)

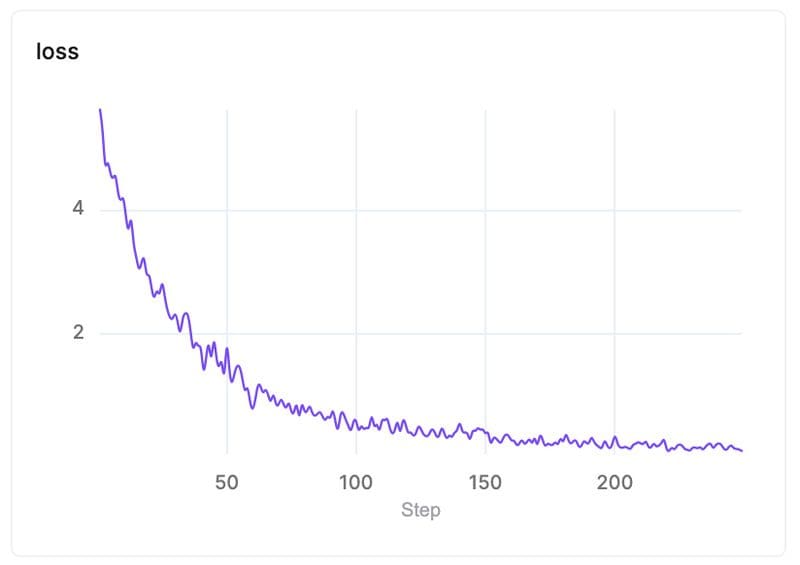

Once training is complete, we can find our models and metrics in the Layer UI. Here is our loss curve:

Gradio is a quick way to demo your machine learning model through a friendly web interface that anyone can use, anywhere. We will build an interactive demo with Gradio to provide a UI for people who want to try our model.

Let's get to coding. Create a Python file called app.py and put the following code in it:

In the above code:

- We fetch our fine-tuned model and the associated tokenizer from Layer

- We create a simple UI with Gradio: an input text field for the query and an output text field to display the predicted SQL query
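The heart of such an app is the callback Gradio runs when a user submits a question. The sketch below shows that flow (prefix, encode, generate, decode) under stated assumptions: `make_predict` is a hypothetical name, the prefix is assumed to match the one used in training, and `encode`, `generate` and `decode` are injected hooks that, with Hugging Face transformers, would wrap the tokenizer and `model.generate()`.

```python
from typing import Callable, List

# Assumed prefix; it must match the prefix used during fine-tuning.
TASK_PREFIX = "translate English to SQL: "


def make_predict(
    encode: Callable[[str], List[int]],
    generate: Callable[[List[int]], List[int]],
    decode: Callable[[List[int]], str],
) -> Callable[[str], str]:
    """Build the function a Gradio text box would call (hypothetical helper)."""

    def predict(question: str) -> str:
        # Same task prefix as training, so the model knows which task to run.
        token_ids = encode(TASK_PREFIX + question.strip())
        output_ids = generate(token_ids)  # model inference step
        return decode(output_ids)  # token ids back to a SQL string

    return predict


if __name__ == "__main__":
    # Echo stubs stand in for a real tokenizer and model.
    predict = make_predict(
        encode=lambda s: [ord(c) for c in s],
        generate=lambda ids: ids,
        decode=lambda ids: "".join(map(chr, ids)),
    )
    print(predict("Cars built after 2020"))
```

With a real model, the resulting `predict` could be wired to the UI via Gradio's `gr.Interface(fn=predict, inputs="text", outputs="text").launch()`.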

We will need some extra libraries for this small Python application, so create a requirements.txt file with the following content:

layer-sdk==0.9.350435
torch==1.11.0
sentencepiece==0.1.96

We're ready to publish our Gradio app now:

- Go to Hugging Face and create a Space.

- Don't forget to select Gradio as the Space SDK.

Now clone your repo into your local directory with:

$ git clone [YOUR_HUGGINGFACE_SPACE_URL]

Put the requirements.txt and app.py files into the cloned directory and run the following commands in your terminal:

$ git add app.py
$ git add requirements.txt
$ git commit -m "Add application files"
$ git push

Now head over to your Hugging Face Space; you will see your example once the app is deployed.

We learned how to fine-tune a large language model to teach it a new skill. Now you can design your own task and fine-tune T5 for your own use case.

Check out the full Fine Tuning T5 project and adapt it to your own task.

Resources

Mehmet Ecevit is Co-founder & CEO at Layer: Collaborative Machine Learning.
