
Image by Author

The success of ChatGPT and GPT-4 has shown how large language models trained with reinforcement learning can result in scalable and powerful NLP applications.

However, the usefulness of the response depends on the prompt, which has led users to explore the prompt engineering space. In addition, most real-world NLP use cases need more sophistication than a single ChatGPT session. And here’s where a library like LangChain can help!

LangChain is a Python library that helps you leverage large language models to build custom NLP applications.

In this guide, we’ll explore what LangChain is and what you can build with it. We’ll also get our feet wet by building a simple question-answering app with LangChain.

Let’s get started!

LangChain, created by Harrison Chase, is a Python library that provides out-of-the-box support for building NLP applications using LLMs. You can connect to various data and computation sources, and build applications that perform NLP tasks on domain-specific data sources, private repositories, and much more.

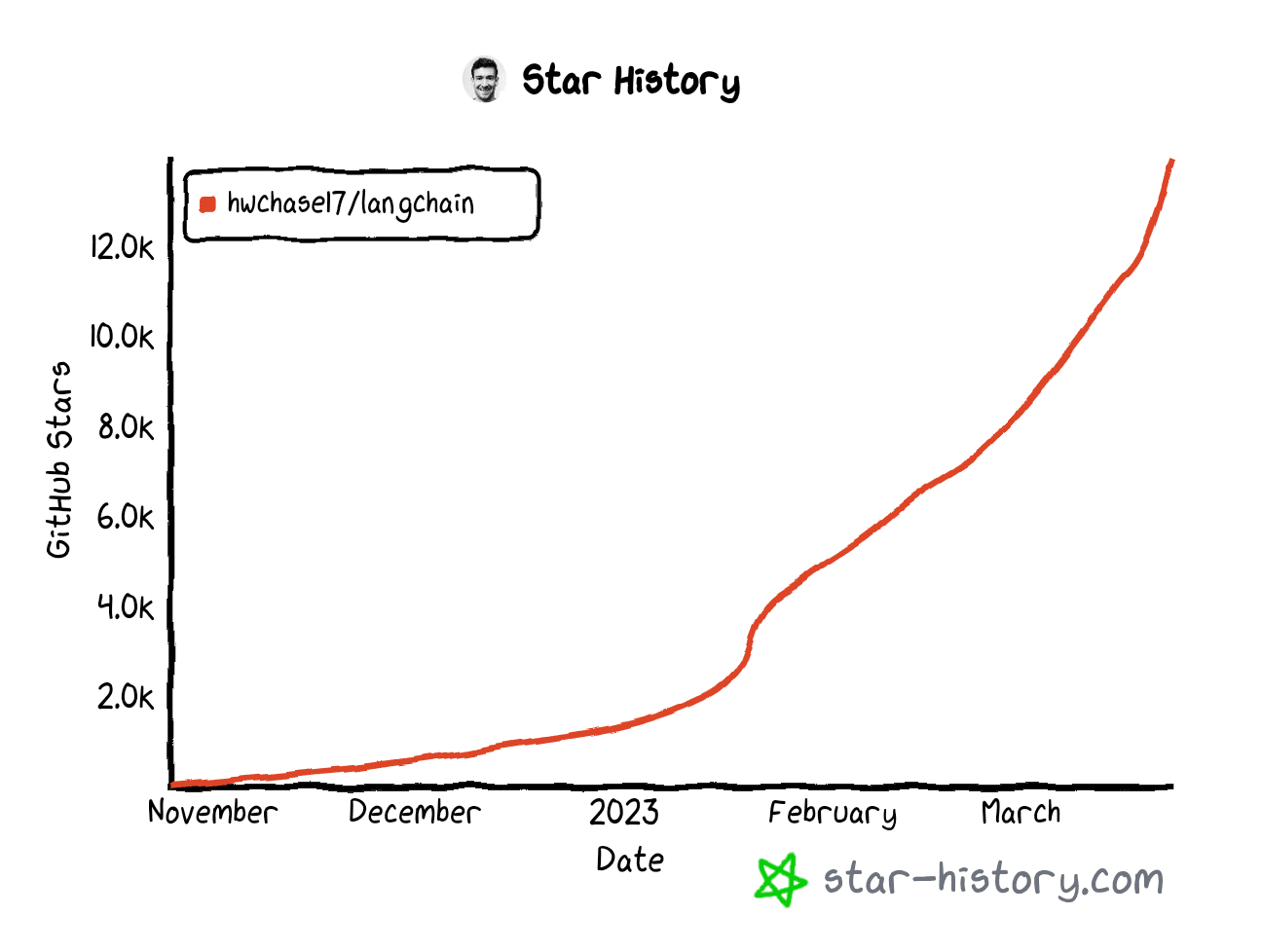

As of writing this article (in March 2023), the LangChain GitHub repository has over 14,000 stars, with more than 270 contributors from across the globe.

LangChain GitHub Star History | Generated on star-history.com

Interesting applications you can build using LangChain include (but are not limited to):

- Chatbots

- Summarization and question answering over specific domains

- Apps that query databases to fetch data and then process it

- Agents that solve specific problems like math and reasoning puzzles

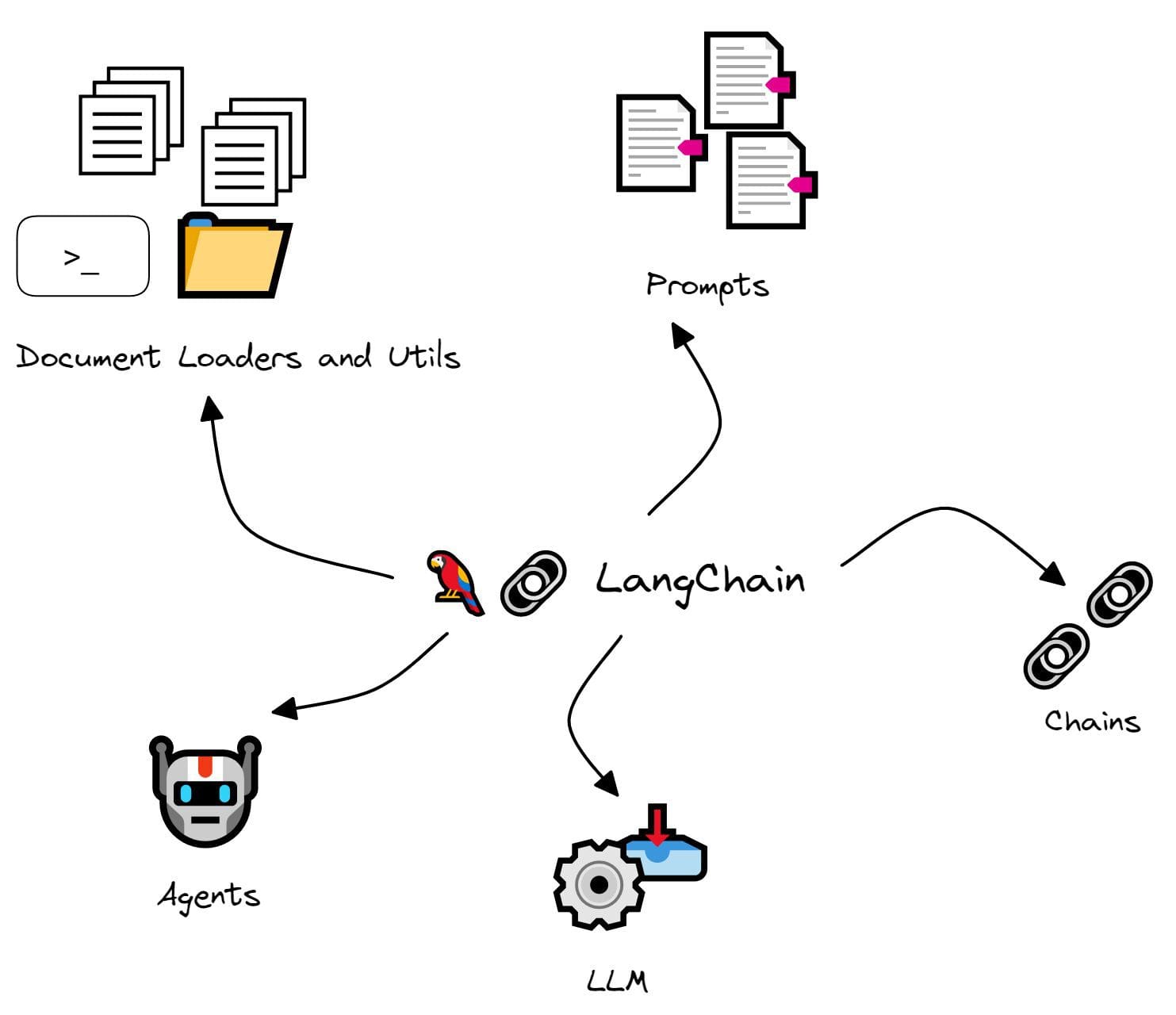

Next, let’s take a look at some of the modules in LangChain:

Image by Author

LLM

The LLM is the fundamental component of LangChain. It’s essentially a wrapper around a large language model that lets you use the functionality and capabilities of a specific large language model.
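To make the wrapper idea concrete, here is a minimal pure-Python sketch of the interface such a wrapper exposes: a prompt string goes in and a completion string comes out. `FakeLLM` and `ask` are hypothetical names, not LangChain classes, and the fake model returns canned answers so the sketch runs without an API key.

```python
from typing import Callable, Dict


class FakeLLM:
    """Hypothetical stand-in for an LLM wrapper that returns canned answers."""

    def __init__(self, responses: Dict[str, str], default: str = "I don't know.") -> None:
        self.responses = responses
        self.default = default

    def __call__(self, prompt: str) -> str:
        # A real wrapper would send `prompt` to a hosted model here.
        return self.responses.get(prompt, self.default)


def ask(llm: Callable[[str], str], question: str) -> str:
    """Application code that relies only on the prompt-in, text-out interface."""
    return llm(question).strip()


llm = FakeLLM({"What is LangChain?": " A Python library for building LLM apps. "})
print(ask(llm, "What is LangChain?"))  # A Python library for building LLM apps.
```

Because `ask` depends only on the prompt-in, text-out shape, swapping the fake for a real model wrapper would not change the application code; that decoupling is the point of having a wrapper.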

Chains

As mentioned, the LLM is the fundamental unit in LangChain. However, as the name LangChain suggests, you can chain together LLM calls depending on specific tasks.

For example, you may need to get data from a specific URL, summarize the returned text, and answer questions using the generated summary.

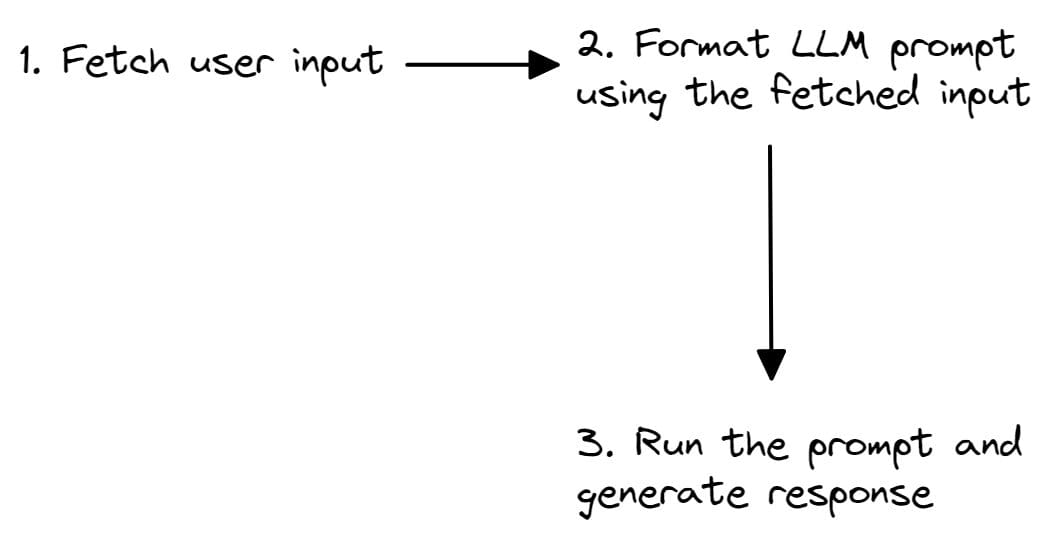

The chain can also be simple. You might need to read in user input, which is then used to construct the prompt. That prompt can then be used to generate a response.
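A minimal sketch of a fixed-order chain, written in plain Python rather than with LangChain’s own classes: each step is a function, and the output of one step becomes the input of the next. `run_chain`, `build_prompt`, and `fake_llm` are hypothetical names; `fake_llm` stands in for a real model call.

```python
from functools import reduce
from typing import Callable, List


def run_chain(steps: List[Callable[[str], str]], user_input: str) -> str:
    """Apply each step in order, feeding each output into the next step."""
    return reduce(lambda value, step: step(value), steps, user_input)


def build_prompt(user_input: str) -> str:
    # Step 1: turn raw user input into a prompt.
    return f"Answer concisely: {user_input.strip()}"


def fake_llm(prompt: str) -> str:
    # Step 2: hypothetical stand-in for a model call; it just wraps the prompt.
    return f"[model output for: {prompt}]"


print(run_chain([build_prompt, fake_llm], "  What is a chain? "))
# [model output for: Answer concisely: What is a chain?]
```

A longer pipeline, such as fetching a page and then summarizing it, would simply be a longer list of steps.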

Image by Author

Prompts

Prompts are at the core of any NLP application. Even in a ChatGPT session, the answer is only as helpful as the prompt. To that end, LangChain provides prompt templates that you can use to format inputs, along with many other utilities.

Document Loaders and Utils

LangChain’s Document Loaders and Utils modules facilitate connecting to sources of data and computation, respectively.

Suppose you have a large corpus of text on economics that you’d like to build an NLP app over. Your corpus may be a mix of text files, PDF documents, HTML web pages, images, and more. Currently, document loaders leverage the Python library Unstructured to convert these raw data sources into text that can be processed.
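As a rough illustration of what a loader does, the sketch below picks a conversion based on the file extension and reduces an HTML page to plain text using only the standard library. It does not use Unstructured, and `load_document` and `html_to_text` are hypothetical helpers. It is deliberately naive; for instance, it would also keep the contents of `<script>` tags.

```python
from html.parser import HTMLParser
from pathlib import Path
from typing import List


class _TextExtractor(HTMLParser):
    """Collects the non-empty text fragments of an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[str] = []

    def handle_data(self, data: str) -> None:
        if data.strip():
            self.chunks.append(data.strip())


def html_to_text(html: str) -> str:
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def load_document(path: str) -> str:
    """Hypothetical loader: choose a conversion based on the file extension."""
    suffix = Path(path).suffix.lower()
    if suffix not in {".txt", ".html", ".htm"}:
        raise ValueError(f"This sketch has no loader for {suffix!r} files")
    raw = Path(path).read_text(encoding="utf-8")
    return html_to_text(raw) if suffix in {".html", ".htm"} else raw


print(html_to_text("<h1>GDP</h1>\n<p>Output grew by <b>2%</b> last year</p>"))
# GDP Output grew by 2% last year
```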

The utils module provides Bash and Python interpreter sessions, among others. These are suitable for applications where it helps to interact directly with the underlying system, or when we need code snippets to compute a specific mathematical quantity or solve a problem rather than having the LLM compute the answer directly.
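The sketch below shows the core idea behind a Python interpreter tool, assuming the model has already produced a code snippet: execute it and capture what it prints, so the printed value can be used as the answer. `run_python` is a hypothetical helper, not LangChain’s utility, and because `exec()` runs arbitrary code it should only ever be used on trusted input or inside a sandbox.

```python
import contextlib
import io


def run_python(code: str) -> str:
    """Execute a code snippet and return everything it printed to stdout.

    Warning: exec() runs arbitrary code. Only use this on trusted input or in a sandbox.
    """
    buffer = io.StringIO()
    namespace: dict = {}  # fresh globals so snippets don't see this module's names
    with contextlib.redirect_stdout(buffer):
        exec(code, namespace)
    return buffer.getvalue()


# A snippet the model might generate to compute an exact quantity.
snippet = "import math\nprint(math.comb(10, 3))"
print(run_python(snippet), end="")  # 120
```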

Agents

We mentioned that chains can help chain together a sequence of LLM calls. In some tasks, however, the sequence of calls is not deterministic: the next step will likely depend on the user input and on the responses from the previous steps.

For such applications, the LangChain library provides “Agents” that can take actions based on inputs along the way instead of following a hardcoded, deterministic sequence.
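Here is a toy sketch of the agent loop, assuming a rule-based `choose_action` function in place of the LLM that would normally decide the next step. Unlike a fixed chain, the action taken depends on the input and on the observations collected so far. All names (`TOOLS`, `choose_action`, `run_agent`) are hypothetical and do not correspond to LangChain’s agent API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools the agent is allowed to call.
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(term) for term in expr.split("+"))),
    "uppercase": lambda text: text.upper(),
}


def choose_action(task: str, history: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Rule-based stand-in for the LLM: pick the next (tool, input) pair."""
    if history:
        # An observation is already available, so finish with it.
        return "finish", history[-1][1]
    if "+" in task:
        return "calculator", task
    return "uppercase", task


def run_agent(task: str, max_steps: int = 5) -> str:
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        tool, tool_input = choose_action(task, history)
        if tool == "finish":
            return tool_input
        observation = TOOLS[tool](tool_input)
        history.append((tool, observation))
    raise RuntimeError("Agent did not finish within max_steps")


print(run_agent("2 + 3 + 4"))  # 9
print(run_agent("hello"))      # HELLO
```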

In addition to the above, LangChain also offers integration with vector databases, has memory capabilities for maintaining state between LLM calls, and much more.
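Memory can be pictured as replaying earlier turns of the conversation into each new prompt, since every LLM call is otherwise independent. Below is a minimal sketch, assuming a simple “Human:/AI:” transcript format and a fixed-size buffer; `ConversationMemory` is a hypothetical class, not LangChain’s implementation.

```python
from collections import deque
from typing import Deque, Tuple


class ConversationMemory:
    """Hypothetical buffer that keeps only the last `max_turns` exchanges."""

    def __init__(self, max_turns: int = 3) -> None:
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def build_prompt(self, new_input: str) -> str:
        # Replay earlier turns so an otherwise stateless LLM call sees the context.
        lines = [f"Human: {user}\nAI: {assistant}" for user, assistant in self.turns]
        lines.append(f"Human: {new_input}\nAI:")
        return "\n".join(lines)


memory = ConversationMemory(max_turns=2)
memory.save("Hi, I'm learning Go.", "Great choice!")
print(memory.build_prompt("Which book should I start with?"))
```

Capping the buffer with `deque(maxlen=...)` keeps prompts from growing without bound as the conversation continues.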

Now that we’ve gained an understanding of LangChain, let’s build a question-answering app using LangChain in five easy steps:

Step 1 – Setting Up the Development Environment

Before we get coding, let’s set up the development environment. I assume you already have Python installed in your working environment.

You can now install the LangChain library using pip:

pip install langchain

As we’ll be using OpenAI’s language models, we need to install the OpenAI SDK as well:

pip install openai

Step 2 – Setting the OPENAI_API_KEY as an Environment Variable

Next, sign in to your OpenAI account. Navigate to account settings > View API Keys. Generate a secret key and copy it.

In your Python script, use the os module and tap into the dictionary of environment variables, os.environ. Set "OPENAI_API_KEY" to the secret API key that you just copied:

import os

os.environ["OPENAI_API_KEY"] = "your-api-key-here"
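Hard-coding the key in the script works, but it is easy to commit it to version control by accident. An alternative, sketched below under the assumption that you export `OPENAI_API_KEY` in your shell (for example, `export OPENAI_API_KEY=...` in bash or zsh) before running the script, is to only read and check the variable in Python. `get_openai_api_key` is a hypothetical helper.

```python
import os


def get_openai_api_key() -> str:
    """Return the key exported in the shell, failing early with a clear message if it is missing."""
    key = os.environ.get("OPENAI_API_KEY")
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it in your shell before starting the script."
        )
    return key
```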

Step 3 – Simple LLM Call Using LangChain

Now that we’ve installed the required libraries, let’s see how to make a simple LLM call using LangChain.

To do so, let’s import the OpenAI wrapper. In this example, we’ll use the text-davinci-003 model:

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-davinci-003")

“text-davinci-003: Can do any language task with better quality, longer output, and consistent instruction-following than the curie, babbage, or ada models. Also supports inserting completions within text.” – OpenAI Docs

Let’s define a question string and generate a response:

query = "Which is the best programming language to learn in 2023?"

print(llm(query))

Output >>

It's difficult to predict which programming language will be the most popular in 2023. However, the most popular programming languages today are JavaScript, Python, Java, C++, and C#, so these are likely to remain popular for the foreseeable future. Additionally, newer languages such as Rust, Go, and TypeScript are gaining traction and may become popular choices in the future.

Step 4 – Creating a Prompt Template

Let’s ask another question about the top resources to learn a new programming language, say, Golang:

query = "What are the top 4 resources to learn Golang in 2023?"

print(llm(query))

Output >>

1. The Go Programming Language by Alan A. A. Donovan and Brian W. Kernighan

2. Go in Action by William Kennedy, Brian Ketelsen and Erik St. Martin

3. Learn Go Programming by John Hoover

4. Introducing Go: Build Reliable, Scalable Programs by Caleb Doxsey

While this works fine for starters, it quickly becomes repetitive when we’re trying to curate resources for a whole list of programming languages and tech stacks.

Here’s where prompt templates come in handy. You can create a template that can be formatted using one or more input variables.

We can create a simple template to get the top k resources to learn any tech stack. Here, we use k and this as input_variables:

from langchain import PromptTemplate

template = "What are the top {k} resources to learn {this} in 2023?"

prompt = PromptTemplate(template=template, input_variables=['k', 'this'])
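To see what a template with input variables amounts to, here is a plain-Python sketch built on `str.format` placeholders. It is not how `PromptTemplate` is implemented; `template_variables` and `format_prompt` are hypothetical helpers that extract the placeholder names and refuse to format when a value is missing. The loop at the end reuses one template across several tech stacks, which is exactly the repetition a template removes.

```python
from string import Formatter
from typing import List


def template_variables(template: str) -> List[str]:
    """Return the {placeholder} names in a str.format-style template, in order."""
    return [field for _, field, _, _ in Formatter().parse(template) if field]


def format_prompt(template: str, **values: object) -> str:
    """Fill the template, refusing to run if any placeholder has no value."""
    missing = set(template_variables(template)) - set(values)
    if missing:
        raise KeyError(f"Missing input variables: {sorted(missing)}")
    return template.format(**values)


template = "What are the top {k} resources to learn {this} in 2023?"
for stack in ["Golang", "Rust", "Kubernetes"]:
    print(format_prompt(template, k=3, this=stack))
```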

Step 5 – Running Our First LLM Chain

We now have an LLM and a prompt template that we can reuse across multiple LLM calls.

llm = OpenAI(model_name="text-davinci-003")

prompt = PromptTemplate(template=template, input_variables=['k', 'this'])

Let’s go ahead and create an LLMChain:

from langchain import LLMChain

chain = LLMChain(llm=llm, prompt=prompt)

You can now pass in the inputs as a dictionary and run the LLM chain as shown:

inputs = {'k': 3, 'this': 'Rust'}

print(chain.run(inputs))

Output >>

1. Rust By Example - Rust By Example is a great resource for learning Rust as it provides a series of interactive exercises that teach you how to use the language and its features.

2. Rust Book - The official Rust Book is a comprehensive guide to the language, from the basics to the more advanced topics.

3. Rustlings - Rustlings is a great way to learn Rust quickly, as it provides a series of small exercises that help you learn the language step-by-step.
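Conceptually, the chain we just ran does two things: it fills in the template with the input dictionary and passes the resulting prompt to the LLM. Below is a minimal sketch of that idea with a stand-in model so it runs offline; `SimpleLLMChain`, `run_many`, and `echo_llm` are hypothetical names, not LangChain’s API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimpleLLMChain:
    """Hypothetical minimal chain: fill a prompt template, then call the model."""

    llm: Callable[[str], str]
    template: str

    def run(self, inputs: Dict[str, object]) -> str:
        prompt = self.template.format(**inputs)
        return self.llm(prompt)

    def run_many(self, input_list: List[Dict[str, object]]) -> List[str]:
        # Reuse the same template and model across several inputs.
        return [self.run(inputs) for inputs in input_list]


def echo_llm(prompt: str) -> str:
    # Stand-in model so the sketch runs offline; it just echoes the prompt.
    return f"<answer to: {prompt}>"


chain = SimpleLLMChain(llm=echo_llm, template="What are the top {k} resources to learn {this} in 2023?")
print(chain.run({"k": 3, "this": "Rust"}))
```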

And that’s a wrap! You now know how to use LangChain to build a simple Q&A app. I hope you’ve gained a cursory understanding of LangChain’s capabilities. As a next step, try exploring LangChain to build more interesting applications. Happy coding!

Bala Priya C is a technical writer who enjoys creating long-form content. Her areas of interest include math, programming, and data science. She shares her learning with the developer community by authoring tutorials, how-to guides, and more.
